By Geoff Ballew | Onsemi

Almost all cameras rely on an image sensor to convert the light in a scene into an electronic image, which is why image sensors are so widespread. However, there are many types of image sensors, each with different attributes and features, and designers need to understand them before choosing a camera for a specific application.

Cameras are proliferating on vehicles, with some luxury car models having a dozen or more. As automakers continue the trend of adding more sensors for safety functions, they face the challenge that each camera adds cost and takes up space. So they look for solutions such as a single camera capable of capturing images optimized for both human vision and machine vision. That is also a challenge, because image quality for human viewing requires different trade-offs than image quality for machine vision.

Human Viewing

The human visual system responds to intensity differences between pixels differently than a machine vision algorithm does. Human perception of intensity is non-linear, meaning that twice as many photons hitting your eye doesn’t look twice as bright. A camera image intended for human viewing must therefore be tuned to map its dynamic range so that it maximizes the perceived detail in both bright and dark areas. We are also sensitive to whether colors “look right” and to flicker from LED light sources, which are becoming much more common, so a camera that distorts colors or shows LED flicker will annoy a human viewer even if the image is otherwise sharp and high quality.

For passive safety systems that assist the driver, such as a rear-view camera, human users have another advantage over machine vision systems: the driver will readily notice if the image appears corrupted and, in that case, will not rely on the camera until conditions change. This makes a fault an inconvenience rather than a major safety issue, because although the benefit of the camera is lost, the driver is aware of it.
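To make the non-linear mapping concrete, the sketch below converts a linear raw pixel value into an 8-bit display code using a simple power-law (gamma) curve. The 12-bit raw depth, the gamma of 2.2, and the name `to_display` are assumptions chosen for illustration; production automotive pipelines typically use more elaborate tone mapping than a single gamma curve.

```python
RAW_BITS = 12        # assumed sensor output bit depth
DISPLAY_GAMMA = 2.2  # assumed display gamma; real pipelines use more elaborate tone curves


def to_display(linear_value: int, raw_bits: int = RAW_BITS, gamma: float = DISPLAY_GAMMA) -> int:
    """Map a linear raw pixel value to an 8-bit display code with a power-law curve."""
    raw_max = (1 << raw_bits) - 1
    # Clamp to the valid raw range, then normalize to 0..1 so the curve is independent of bit depth.
    normalized = min(max(linear_value, 0), raw_max) / raw_max
    # Raising to 1/gamma (< 1) expands dark values and compresses bright ones,
    # roughly matching how human perception responds to light intensity.
    return round(255 * normalized ** (1 / gamma))


if __name__ == "__main__":
    # Doubling the photon count (e.g. 1000 -> 2000) raises the display code by much less than 2x.
    for value in (500, 1000, 2000, 4000):
        print(value, to_display(value))
```

Printing the table shows each doubling of the raw value producing a progressively smaller step in the display code, which is the behavior a human-viewing stream is tuned for.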

Machine Vision

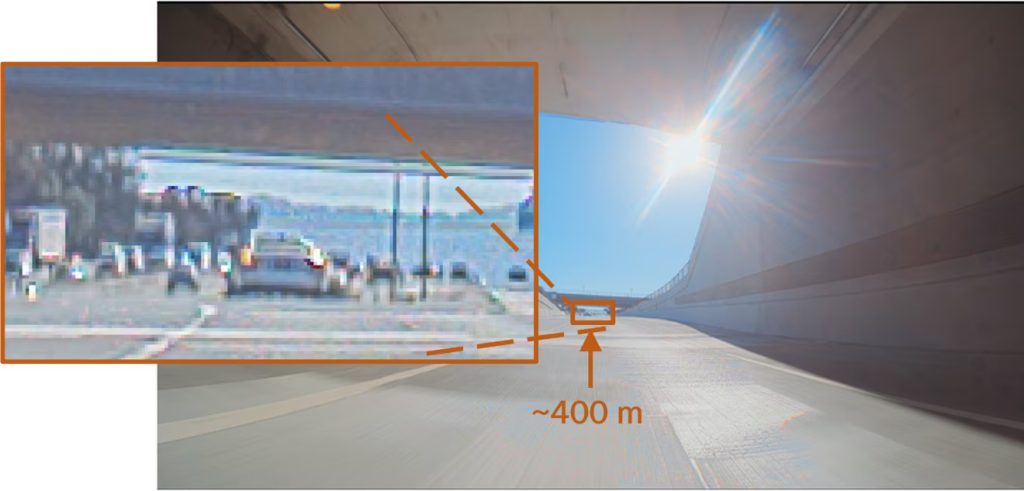

In contrast to a human viewer, an automated machine vision system looks at the mathematical value of each pixel in the image, so it has a linear response to the number of photons recorded by each pixel. This means the camera must be tuned to output an image that maps the measured pixel values differently than it would for human viewing.

Additionally, a machine vision system must either be programmed to detect image defects or rely on dedicated error-detection hardware. Without such detection, the system may work incorrectly, or not at all, without notifying the driver that its functionality is compromised. For an active safety system such as Automated Emergency Braking, either kind of failure is serious: false positives cause the system to apply the brakes when no collision hazard exists, and false negatives mean the system does not apply the brakes when a hazard does exist. A driver relying on that system needs to know when it is not fully functional, yet the system may give no warning that it is compromised. Systems that do alert the driver that they are compromised or “unavailable” typically rely on special hardware features for error or fault detection in the sensor. Industry standards cover these features: ISO 26262 defines Automotive Safety Integrity Levels (ASIL), and an ASIL-capable sensor has features that detect and report faults for improved safety. These two differences, linear pixel mapping and the need for fault detection, show clearly why sensors for machine vision need different configurations than sensors for human viewing.
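As an illustration of the kind of software-side check a machine vision system might run on each incoming frame, the sketch below flags three symptoms of a compromised image: a payload whose CRC-32 does not match the value reported with it, a frame counter that did not advance by one (a frozen or dropped frame), and a uniform payload (a stuck or blank output). The `Frame` layout, the 16-bit counter width, and the fault names are hypothetical; this is not an ASIL implementation and does not reflect any particular sensor's on-chip safety features.

```python
import zlib
from dataclasses import dataclass
from typing import List, Optional

COUNTER_MODULUS = 2 ** 16  # assumed width of the sensor's frame counter (hypothetical)


@dataclass
class Frame:
    """Hypothetical frame as delivered over the sensor link."""
    counter: int       # frame counter reported by the sensor
    pixels: bytes      # raw pixel payload
    reported_crc: int  # CRC-32 the sensor computed over the payload


def check_frame(frame: Frame, previous_counter: Optional[int]) -> List[str]:
    """Return the faults detected in one frame; an empty list means none were found."""
    faults = []
    # A CRC mismatch means the payload changed between the sensor and the processor.
    if zlib.crc32(frame.pixels) != frame.reported_crc:
        faults.append("crc_mismatch")
    # A counter that did not advance by exactly one suggests a frozen or dropped frame.
    if previous_counter is not None and frame.counter != (previous_counter + 1) % COUNTER_MODULUS:
        faults.append("counter_jump")
    # A payload with only one distinct value is a crude sign of a stuck or blank output.
    if len(set(frame.pixels)) == 1:
        faults.append("uniform_frame")
    return faults
```

A caller could mark the camera function as “unavailable” and warn the driver whenever `check_frame` returns a non-empty list, which is the behavior the paragraph above says a silent machine vision system lacks.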

Sensor Solutions for Viewing and Sensing with a Single Camera

For engineers designing a camera system intended for both human and machine vision, the good news is that sensors are available with a superset of features covering both applications, and they can output two simultaneous data streams: one optimized for human viewing and the other for machine vision. This lets automakers use a single camera in a given location, which saves space in the vehicle and reduces system cost while still delivering application-optimized images for both workloads.
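On a real dual-output sensor the split happens in hardware and the details are device-specific. The sketch below only illustrates the idea in software: each linear raw capture yields a tone-mapped 8-bit frame for the driver's display and an untouched linear frame for the vision algorithms. The 12-bit raw depth, the gamma-2.2 lookup table, and the names `split_streams` and `DISPLAY_LUT` are assumptions for illustration.

```python
from typing import Iterable, Iterator, List, Tuple

RAW_BITS = 12        # assumed raw bit depth of the sensor output
DISPLAY_GAMMA = 2.2  # assumed display gamma for the human-viewing stream

# Precompute the tone curve once as a lookup table (hypothetical name), one entry per raw code.
RAW_MAX = (1 << RAW_BITS) - 1
DISPLAY_LUT = [round(255 * (v / RAW_MAX) ** (1 / DISPLAY_GAMMA)) for v in range(RAW_MAX + 1)]


def split_streams(raw_frames: Iterable[List[int]]) -> Iterator[Tuple[List[int], List[int]]]:
    """For each linear raw capture, yield (viewing_frame, vision_frame)."""
    for raw in raw_frames:
        # Non-linear, 8-bit stream tuned for the driver's display.
        viewing = [DISPLAY_LUT[v] for v in raw]
        # Linear, full-bit-depth copy for the machine vision algorithms.
        vision = list(raw)
        yield viewing, vision
```

Using a lookup table rather than evaluating the power function per pixel mirrors how image pipelines commonly apply tone curves, and copying the raw list keeps the two streams independent, so processing one cannot alter the other.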