Ben Miller, PRODUCT MARKETING MANAGER, Keysight Technologies

Although warehouses filled with acres of buzzing server racks may not seem like the most likely places to find exciting new technology, data centers play a crucial role in the emerging technologies of tomorrow. Industry 4.0, artificial intelligence (AI), virtual reality (VR), the metaverse, and the Internet of Things (IoT) are all high-demand applications that rely on data centers to provide powerful computing resources.

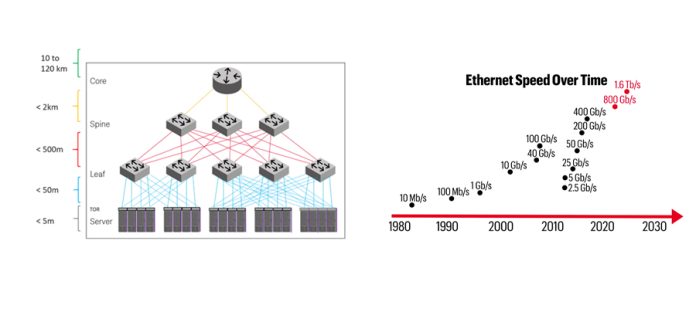

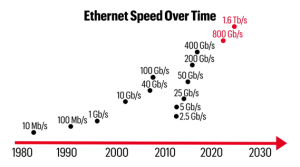

For the last three decades, modern society has increasingly depended on data center networks. Ethernet speeds have increased dramatically, transmitting the high volumes of data that the modern world uses to communicate and make complex decisions. Despite many recent innovations in high-speed networking, data speeds must still keep up with exponentially growing demand. Further innovation in high-speed networking will enable 800 gigabits per second (800G) and 1.6 terabits per second (1.6T) speeds, opening the door to a more connected world.

CLOUD COMPUTING, EDGE COMPUTING, AND INTELLIGENCE FACTORIES

A significant trend in recent years has been offloading data processing to external servers. Hyperscale data centers have popped up globally to support cloud computing networks. Smaller data centers have now become more common for time-sensitive applications that use localized edge computing. Data centers have evolved beyond the Internet, becoming “intelligence factories” that provide powerful computing resources for high-demand applications.

In an Internet application, individual computers (clients) connect to a modem or router, which sends data to a server elsewhere. Cloud and edge computing work similarly: users can log into a server that hosts more powerful programs than they can run on their own hardware. It is easy to see why so many emerging technologies could benefit from offloading processing to external servers.

Figure 1: Autonomous vehicles are a prime example of the benefits of offloading data to edge computing

Edge computing is especially interesting for its impact on autonomous vehicles (AVs). Safely operating an AV requires thousands of urgent decisions. Instead of hosting a supercomputer in the car to process the sensor data or sending it to a distant data center, AVs utilize smaller servers on the “edge,” closer to the client, dramatically reducing latency by processing locally. Data from other vehicles and road infrastructure also feed a central server as part of an ecosystem of clients (this technology is called “vehicle-to-everything” or V2X). The server can then make the best decisions for all clients, which could eventually allow intersections without stoplights or collisions.
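To put rough numbers on the latency argument, here is a minimal sketch that estimates round-trip propagation delay over optical fiber. It assumes light travels through fiber at about two-thirds of its speed in a vacuum, and the two distances are hypothetical values chosen only for illustration. It ignores switching, queuing, and processing time, so real latencies would be higher.

```python
# Approximate speed of light in optical fiber (about two-thirds of c).
FIBER_SPEED_M_PER_S = 2.0e8


def round_trip_ms(distance_km: float) -> float:
    """Round-trip propagation delay over fiber, in milliseconds.

    Only propagation is modeled; switching and processing time are ignored.
    """
    one_way_s = (distance_km * 1000.0) / FIBER_SPEED_M_PER_S
    return 2.0 * one_way_s * 1000.0


# Hypothetical distances, for illustration only.
scenarios = [("edge server", 10.0), ("distant data center", 1500.0)]

for label, distance_km in scenarios:
    print(f"{label:>20}: {round_trip_ms(distance_km):.2f} ms round trip")
```

Under these assumptions, the nearby server answers in about a tenth of a millisecond of propagation time, while the distant one needs roughly 15 ms before any computation has even started.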

Cloud computing applications are numerous and diverse, ranging from controlling factory robots to maximize efficiency, to hosting thousands of VR users in the metaverse, to running remote AI programs like ChatGPT and DALL-E. In most of these applications, transmitting and processing data with minimal delay is key. Latency requirements are what differentiate cloud and edge computing applications: network lag in the metaverse could make users motion sick, but lag in a V2X ecosystem could cause a deadly collision.

Today’s 400G data centers can support streaming 4K video and large conference calls, but they are not yet fast enough for many emerging applications. As the sheer volume of data increases worldwide, even 800G may not be fast enough to carry it. The networking industry is already looking toward 1.6T.

INSIDE THE DATA CENTER

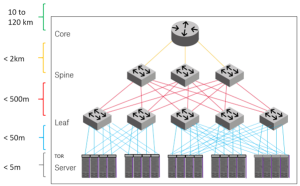

To understand 1.6T research and development, one first needs to understand data centers. Data centers are organized around a core router, fed by a network of switches that provide connections between each row and rack of servers. Each server rack features a top-of-rack (TOR) switch that routes data and delegates processes to specific servers. Copper or fiber optic cables connect the backplane of each server with optical modules that perform the electro-optic conversion.

Figure 2: A typical data center structure featuring core, spine, and leaf switches connecting each server.

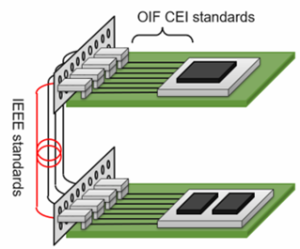

Physical layer transceivers follow standards from the Institute of Electrical and Electronics Engineers (IEEE) and the Optical Internetworking Forum (OIF). These two groups define the interoperability of each interface, including the die-to-die connections, the chip-to-module and chip-to-chip interfaces, and the backplane cables. The most recent standards, as of this writing, are IEEE 802.3ck, which defines 100G, 200G, and 400G networks using multiple 100 Gb/s lanes, and OIF CEI-112G, a collection of standards relating to the transmission of data at 112 Gb/s per lane.
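The idea of building a link from multiple lanes comes down to simple division. The sketch below, with a hypothetical helper named `lanes_needed`, counts how many 100 Gb/s lanes each of the rates mentioned above would require. It works on nominal rates only and is not taken from either standard.

```python
import math


def lanes_needed(aggregate_gbps: int, lane_gbps: int) -> int:
    """Number of parallel lanes required to reach a nominal aggregate rate."""
    return math.ceil(aggregate_gbps / lane_gbps)


LANE_RATE_GBPS = 100  # nominal per-lane rate named in the text

for aggregate in (100, 200, 400):
    count = lanes_needed(aggregate, LANE_RATE_GBPS)
    print(f"{aggregate}G link -> {count} x {LANE_RATE_GBPS} Gb/s lane(s)")
```

The same arithmetic shows why faster links put pressure on the per-lane rate: if lanes stayed at 100 Gb/s, every doubling of the link speed would double the lane count.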

Figure 3: Switch silicon, module, and backplane connections defined by OIF CEI and IEEE standards. Image courtesy Alphawave IP.

HISTORY OF DATA CENTER SPEEDS

In 1983, when the first IEEE 802.3 standard was released, Ethernet speeds were only 10 Mb/s. Over the last few decades, Ethernet speeds have grown dramatically through continuous innovation, reaching 400 Gb/s, aggregated from four 56 GBaud (GBd) PAM4 lanes. The industry expects to double that speed twice over the next few years to keep up with bandwidth demand. How can developers achieve this? Is it physically possible to send that much data over a channel that quickly?
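The arithmetic behind the four-lane figure can be sketched in a few lines. PAM4 uses four signal levels, so each symbol carries two bits. The function name below is hypothetical, and the calculation covers only the raw line rate; it does not model encoding or error-correction overhead, which is why the result lands above the nominal 400 Gb/s.

```python
import math


def raw_line_rate_gbps(symbol_rate_gbd: float, levels: int, lanes: int) -> float:
    """Raw aggregate bit rate: symbols per second x bits per symbol x lanes."""
    bits_per_symbol = math.log2(levels)  # PAM4 has 4 levels, so 2 bits per symbol
    return symbol_rate_gbd * bits_per_symbol * lanes


# Figures from the text: four lanes, 56 GBd each, PAM4 signaling.
current = raw_line_rate_gbps(56, levels=4, lanes=4)
print(f"4 lanes x 56 GBd PAM4: {current:.0f} Gb/s raw")

# Illustration only: doubling any single factor doubles the raw rate.
more_lanes = raw_line_rate_gbps(56, levels=4, lanes=8)
faster_symbols = raw_line_rate_gbps(112, levels=4, lanes=4)
print(f"8 lanes x 56 GBd PAM4: {more_lanes:.0f} Gb/s raw")
print(f"4 lanes x 112 GBd PAM4: {faster_symbols:.0f} Gb/s raw")
```

The product has three factors, so in principle there are three knobs to turn: more lanes, faster symbols, or more bits per symbol. The sketch says nothing about which of these is practical.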

Figure 4: Ethernet speeds timeline, from the first 10 Mb/s IEEE 802.3 standard to the upcoming 800G/1.6T IEEE 802.3df standard.

Now you understand how important data centers are to emerging technologies and why the IEEE and OIF are continuously working on increasing networking speeds to meet demand. In Part Two: Challenges and Innovations for 1.6T Data Center Networks, I’ll discuss the research and development on 800G and 1.6T networks. We’ll look at options that the industry is considering to increase data network speeds and the technical tradeoffs of each. I will also share some insights on when we might see these ultra-fast hyperscale data centers become a reality.

To learn more, take the 1.6T Ethernet in the Data Center course on Keysight University to get exclusive insights from a variety of industry experts from across the networking ecosystem.