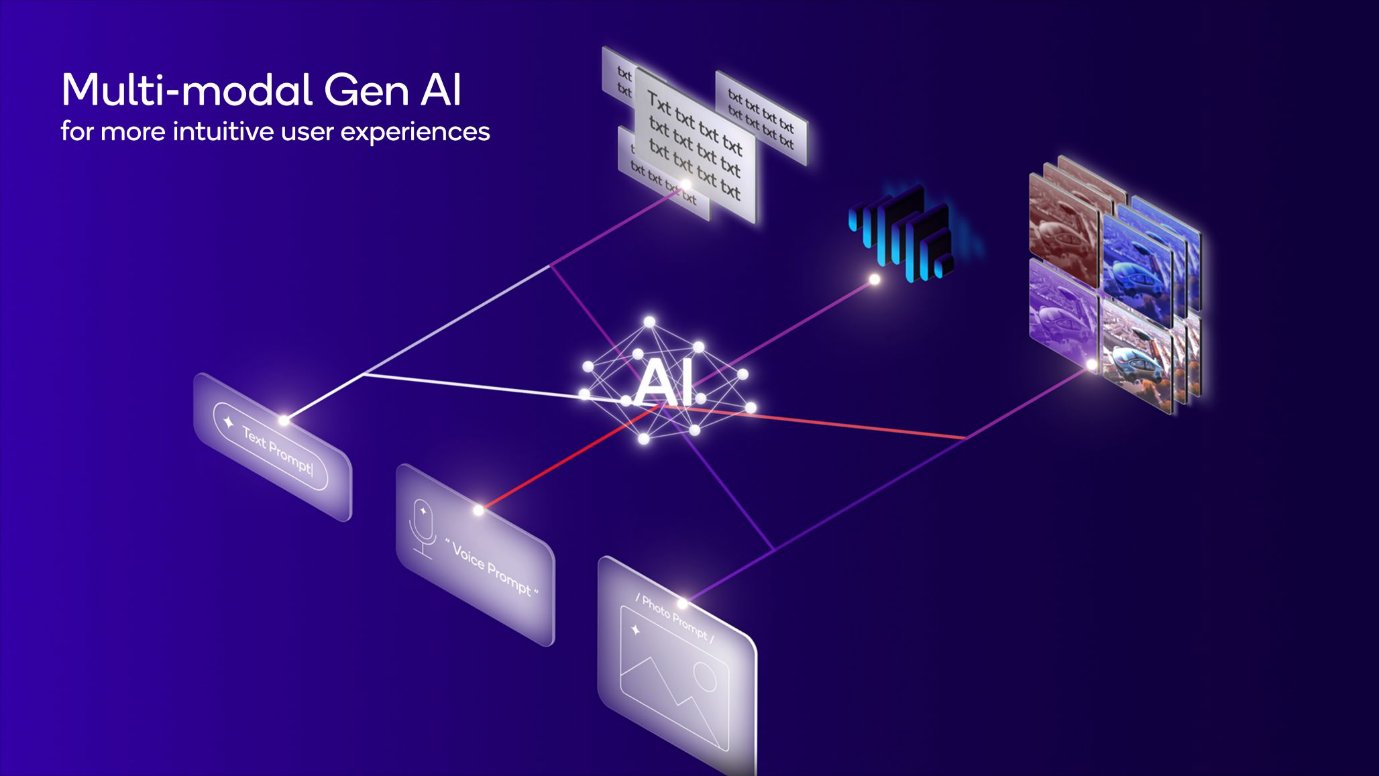

Leverage additional modalities in generative AI models to enable necessary advancements for contextualization and customization across use cases

Users consistently want better contextualization and customization. For example, consumers want devices to automatically use contextual information and custom preferences from their smartphones’ data and sensors to make experiences more intuitive and seamless, such as suggesting restaurants for a meal based on current location, time of day and preferred food choices.

Although generative artificial intelligence (AI) is already showing emergent and transformational capabilities, there is still much room for improvement. Technologies like multimodal generative AI can address the trend toward more contextual and customized experiences in generative AI.

Multimodal AI models better understand the world

Large language models (LLMs) accomplish amazing feats for models that were trained purely on text. How much better would they be with different forms of information that contain additional knowledge?

Humans learn a lot through language and reading text, but we also create our understanding of the world through all our senses and interactions:

Our eyes allow us to see what happens when a ball rolls on a slanted floor and how it disappears when it goes behind a couch.

Our ears can detect emotion in voices or the direction of a siren moving toward us.

Our touch and interaction with the world teach us how hard to squeeze a Styrofoam coffee cup with our hand and how to walk without falling.

The list goes on.

Although language can describe almost all these things, it may not do it as well or as efficiently as other modalities.

Just as humans use a variety of senses to learn, it’s logical that generative AI can use other modalities in addition to text to learn: That’s where multimodal generative AI models come in.

These models can be trained on a variety of modalities from text, images, voice, audio, video and 3D to light detection and ranging (LIDAR), radio frequency (RF) and just about any sensor data.

By using all these sensors, fusing the data and having a more holistic understanding of the world, multimodal generative AI models can provide better answers. AI researchers have done just that: they trained large multimodal models (LMMs) in the cloud on data from a variety of modalities, and the resulting models are “smarter.” OpenAI’s GPT-4V and Google’s Gemini are examples of these LMMs.
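To make "fusing the data" concrete, here is a minimal sketch of one common approach: project each modality's features into a shared space, then concatenate them into a single sequence that one model can consume. This is an illustration only, not a description of how GPT-4V or Gemini work. The feature values, the projection matrices and the function names `project` and `fuse` are all hypothetical.

```python
from typing import Dict, List

Vector = List[float]


def project(vec: Vector, weights: List[Vector]) -> Vector:
    """Map a modality-specific vector into the shared space (matrix-vector product)."""
    return [sum(w * x for w, x in zip(row, vec)) for row in weights]


def fuse(features: Dict[str, List[Vector]],
         projections: Dict[str, List[Vector]]) -> List[Vector]:
    """Project every modality's vectors to a common width and concatenate them."""
    fused: List[Vector] = []
    for modality in sorted(features):  # fixed order keeps the result deterministic
        for vec in features[modality]:
            fused.append(project(vec, projections[modality]))
    return fused


# Hypothetical toy features: two image patches (3 values each), one audio frame (2 values).
features = {
    "image": [[1.0, 0.0, 2.0], [0.0, 1.0, 1.0]],
    "audio": [[3.0, 4.0]],
}
# Hypothetical projection matrices into a shared 2-dimensional space.
projections = {
    "image": [[1.0, 0.0, 0.0], [0.0, 1.0, 1.0]],
    "audio": [[0.5, 0.0], [0.0, 0.5]],
}

fused = fuse(features, projections)
print(len(fused), "vectors of width", len(fused[0]))
```

In a real model the projections would be learned during training and the vectors would have thousands of dimensions. The sketch only shows the shape of the idea: once every modality shares one representation, a single model can reason over all of them together.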

What does this buy you? LMMs, for example, can act as a universal assistant that takes in any input in any modality and provides much improved answers to a much broader set of questions. Asking whether you can park a car based on a complicated parking sign or how to fix a broken dishwasher based on a vibrating noise are a couple of examples of what could be possible.

The next step is deployment of the LMMs by running inference: While it is possible to run this in the cloud, running generative AI inference on edge devices provides many benefits, such as privacy, reliability, cost efficiency and immediacy.

For example, the sensors and the data they produce are located on the edge devices themselves, so it is more cost-efficient and scalable to process and keep the data on device.

On-device LLMs can now see

Qualcomm AI Research recently demonstrated the world’s first multimodal LLM on an Android phone. We showed Large Language and Vision Assistant (LLaVA), an LMM with more than 7 billion parameters that can accept multiple types of data inputs, including text and images, and generate multi-turn conversations about an image. LLaVA ran on a reference design powered by the Snapdragon 8 Gen 3 Mobile Platform at a responsive token rate completely on device through full-stack AI optimization.
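As a rough illustration of what a multi-turn conversation about an image looks like from the model's side, the sketch below flattens earlier turns and a new question into one text prompt, with a placeholder marking where the image features belong. It is not the code used in the demonstration. The `USER:`/`ASSISTANT:` layout and the `<image>` placeholder follow a convention used by some LLaVA releases, but the exact template varies by model and version, so treat it as an assumption. The function name `build_prompt` is hypothetical.

```python
from typing import List, Tuple

IMAGE_TOKEN = "<image>"  # assumed placeholder marking where image features are inserted


def build_prompt(turns: List[Tuple[str, str]], next_question: str) -> str:
    """Flatten prior (question, answer) turns plus a new question into one prompt.

    The image placeholder appears once, in the first user turn, so every
    later question is answered in the context of the same image.
    """
    questions = [q for q, _ in turns] + [next_question]
    answers = [a for _, a in turns]
    lines = []
    for i, question in enumerate(questions):
        prefix = IMAGE_TOKEN + "\n" if i == 0 else ""
        lines.append(f"USER: {prefix}{question}")
        # Leave the final assistant turn open for the model to complete.
        lines.append(f"ASSISTANT: {answers[i]}" if i < len(answers) else "ASSISTANT:")
    return "\n".join(lines)


history = [("What is in this photo?", "A parking sign with several time restrictions.")]
prompt = build_prompt(history, "Can I park here at 6 p.m. on a Sunday?")
print(prompt)
```

Because the whole history is resent with each new question, the model can resolve follow-ups such as "here" against both the image and the earlier answers.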

LMMs with language understanding and visual comprehension enable many use cases, such as identifying and discussing complex visual patterns, objects and scenes.

For example, a visual AI assistant could help visually impaired people better understand and interact with their environment, thus improving their quality of life.

On-device LLMs can now hear

On a Windows PC powered by Snapdragon X Elite, we also recently showcased the world’s first on-device demonstration of an LMM with more than 7 billion parameters that can accept text and environmental audio inputs (e.g., music or the sound of traffic) and then generate multi-turn conversations about the audio.

The additional context from audio can help the LMM to provide better answers to prompts from the user. We are excited to see visual, voice and audio modalities already being enabled through on-device LMMs and look forward to even more modalities being added.

More on-device generative AI technology advancements to come

Making AI models that better understand contextual information is necessary for better answers and improved experiences, and multimodal generative AI is just one of the latest transformative technologies coming soon to your next device.