Face recognition has gained widespread acceptance for authenticating access to smartphones, but attempts to apply the technology more broadly have fallen short, despite its effectiveness and ease of use. Along with the technical challenges of implementing reliable, low-cost machine learning solutions, developers must address user concerns around the reliability and privacy of conventional face recognition methods, which depend on cloud connections and are vulnerable to spoofing.

This article discusses the difficulty of secure authentication before introducing a hardware and software solution from NXP Semiconductors that addresses the issues. It then shows how developers without prior experience in machine learning methods can use the solution to rapidly implement offline anti-spoofing face recognition in a smart product.

The challenges of secure authentication for smart products

In addressing growing concerns about the security of smart products, developers have found themselves left with few tenable options for reliably authenticating users looking for quick yet secure access. Traditional approaches rely on multifactor authentication, which rests on some combination of the three classical factors of authentication: “Something you know”, such as a password; “Something you have”, such as a physical key or key card; and “Something you are”, which is typically a biometric factor such as a fingerprint or iris. Using this approach, a strongly authenticated door lock might require the user to enter a passcode, use a key card, and further provide a fingerprint to unlock the door. In practice, such stringent requirements are bothersome or simply impractical for consumers who need to frequently and easily re-authenticate themselves with a smartphone or other routinely used device.

The use of face recognition has significantly simplified authentication for smartphone users, but smartphones possess some advantages that might not be available in every device. Besides the significant processing power available in leading-edge smartphones, always-on connectivity is a fundamental requirement for delivering the sophisticated range of services routinely expected by their users.

For many products that require secure authentication, the underlying operating platform will typically provide more modest computing resources and more limited connectivity. Face recognition services from the leading cloud-service providers shift the processing load to the cloud, but the need for robust connectivity to ensure minimal response latency might impose requirements that remain beyond the capabilities of the platform. Of equal or more concern to users, transmitting their image across public networks for processing and potentially storing it in the cloud raises significant privacy issues.

Using NXP Semiconductors’ i.MX RT106F processors and associated software, developers can now implement offline face recognition that directly addresses these concerns.

Hardware and software for spoof-proof offline face recognition

A member of the NXP i.MX RT1060 Crossover microcontroller (MCU) family, the NXP i.MX RT106F series is specifically designed to support easy integration of offline face recognition into smart home devices, consumer appliances, security devices, and industrial equipment. Based on an Arm Cortex-M7 processor core, the processors run at 528 megahertz (MHz) for the industrial grade MIMXRT106FCVL5B, or 600 MHz for commercial grade processors such as the MIMXRT106FDVL6A and MIMXRT106FDVL6B.

Besides supporting a wide range of external memory interfaces, i.MX RT106F processors include 1 megabyte (Mbyte) of on-chip random access memory (RAM) with 512 kilobytes (Kbyte) configured as general purpose RAM, and 512 Kbytes that can be configured either as general purpose RAM or as tightly coupled memory (TCM) for instructions (I-TCM) or data (D-TCM). Along with on-chip power management, these processors offer an extensive set of integrated features for graphics, security, system control, and both analog and digital interfaces typically needed to support consumer devices, industrial human machine interfaces (HMIs), and motor control (Figure 1).

Figure 1: NXP Semiconductors’ i.MX RT106F processors combine a full set of functional blocks needed to support face recognition for consumer, industrial and security products. (Image source: NXP)

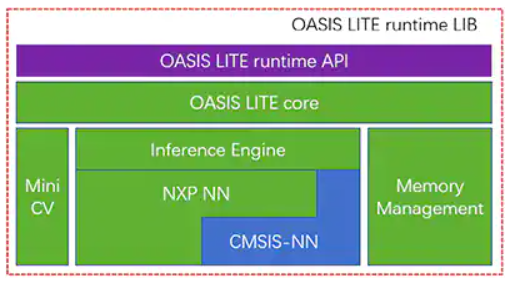

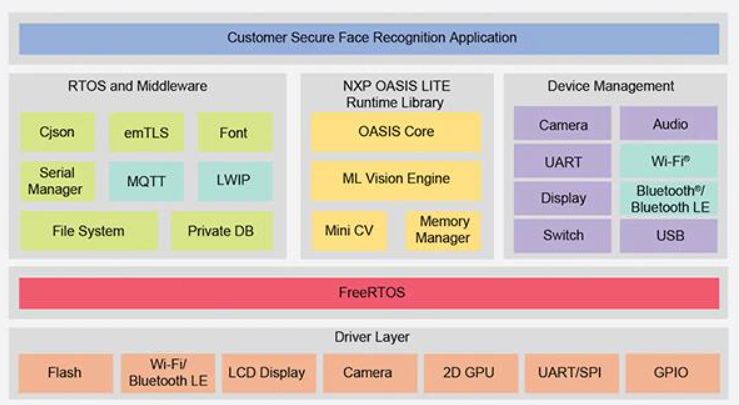

Although similar to other i.MX RT1060 family members, i.MX RT106F processors bundle in a runtime license for NXP’s Oasis Lite face recognition software. Designed to speed inference on this class of processors, the Oasis Lite runtime environment performs face detection, recognition, and even limited emotion classification using neural network (NN) inference models running on an inference engine and MiniCV, a stripped-down version of the open source OpenCV computer vision library. The inference engine builds on an NXP NN library and the Arm Cortex Microcontroller Software Interface Standard NN (CMSIS-NN) library (Figure 2).

Figure 2: The NXP Oasis Lite runtime library includes an Oasis Lite core that uses MiniCV and an NXP inference engine built on neural network libraries from NXP and Arm. (Image source: NXP)

The inference models reside on the i.MX RT106F platform, so face detection and recognition execute locally, unlike other solutions that depend on cloud-based resources to run the machine learning algorithms. Thanks to this offline face recognition capability, designers of smart products can ensure private, secure authentication despite low bandwidth or spotty Internet connectivity. Furthermore, authentication occurs quickly with this hardware and software combination, requiring less than 800 milliseconds (ms) for the processor to wake from low-power standby and complete face recognition.

Used with the i.MX RT106F processor, the Oasis Lite runtime simplifies implementation of offline face recognition for smart products, but the processor and runtime environment are of course only part of a required system solution. Along with a more complete set of system components, an effective authentication solution requires imaging capability that can mitigate a type of security threat called presentation attacks. These attacks attempt to spoof face recognition authentication by using photographs. For developers looking to rapidly deploy face-based authentication in their own products, the NXP SLN-VIZNAS-IOT development kit and associated software provide a ready-to-use platform for evaluation, prototyping and development of offline, anti-spoofing face recognition.

Complete secure systems solution for face recognition

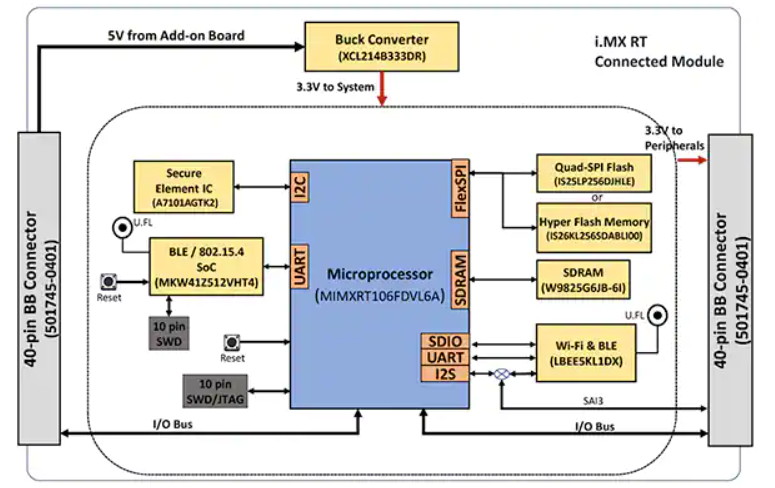

As with most advanced processors, the i.MX RT106F processor requires only a few additional components to provide an effective computing platform. The NXP SLN-VIZNAS-IOT kit completes the design by integrating the i.MX RT106F with additional devices to provide a complete hardware platform (Figure 3).

Figure 3: The NXP SLN-VIZNAS-IOT kit includes a connected module that provides a robust connected system platform needed to run authentication software. (Image source: NXP)

The kit’s connected module board combines an NXP MIMXRT106FDVL6A i.MX RT106F processor, an NXP A71CH secure element, and two connectivity options—NXP’s MKW41Z512VHT4 Kinetis KW41Z Bluetooth low energy (BLE) system-on-chip (SoC) and Murata Electronics’ LBEE5KL1DX-883 Wi-Fi/Bluetooth module.

To supplement the processor’s on-chip memory, the connected module adds Winbond Electronics’ W9825G6JB 256 megabit (Mbit) synchronous dynamic RAM (SDRAM), an Integrated Silicon Solution, Inc. (ISSI) IS26KL256S-DABLI00 256 Mbit NOR flash, and ISSI’s IS25LP256D 256 Mbit Quad Serial Peripheral Interface (SPI) device.

Finally, the module adds a Torex Semiconductor XCL214B333DR buck converter to supplement the i.MX RT106F processor’s internal power management capabilities for the additional devices on the connected module board.

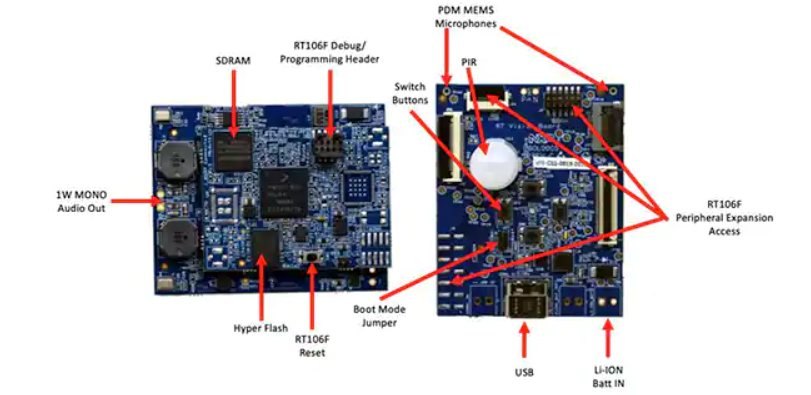

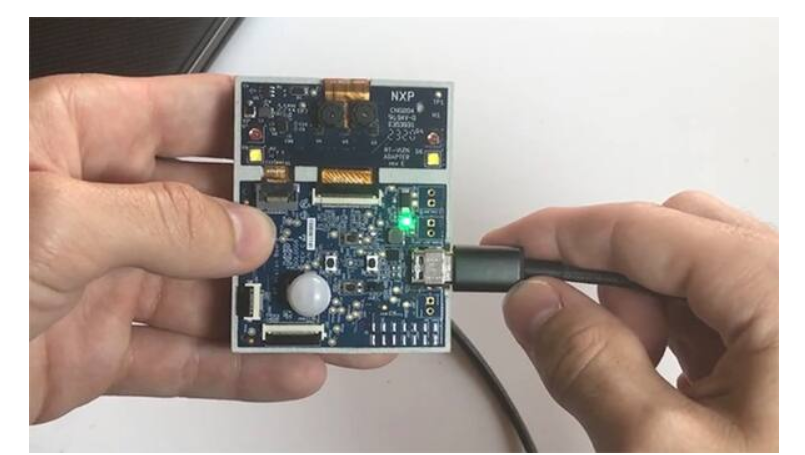

The connected module in turn mounts on a vision application board that combines a Murata Electronics IRA-S210ST01 passive infrared (PIR) sensor, motion sensor, battery charger, audio support, light emitting diodes (LEDs), buttons, and interface connectors (Figure 4).

Figure 4: In the NXP SLN-VIZNAS-IOT kit, the connected module (left) is attached to the vision application board to provide the hardware foundation for face recognition. (Image source: NXP)

Along with this system platform, a face recognition system design clearly requires a suitable camera sensor to capture an image of the user’s face. As mentioned earlier, however, concerns about presentation attacks require additional imaging capabilities.

Mitigating presentation attacks

Researchers have for years explored different presentation attack detection (PAD) methods designed to mitigate attempts such as using latent fingerprints or images of a face to spoof biometric-based authentication systems. Although the details are well beyond the scope of this article, PAD methods in general use deep analysis of the quality and characteristics of the biometric data captured as part of the process, as well as “liveness” detection methods designed to determine if the biometric data was captured from a live person. Underlying many of these different methods, deep neural network (DNN) models play an important role not only in face recognition, but also in identifying attempts to spoof the system. Nevertheless, the imaging system used to capture the user’s face can provide additional liveness detection support.

For the SLN-VIZNAS-IOT kit, NXP includes camera modules that contain a pair of ON Semiconductor’s MT9M114 image sensors. Here, one camera is equipped with a red, green, blue (RGB) filter, and the other camera is fitted with an infrared (IR) filter. Attached through camera interfaces to the vision application board, the RGB camera generates a normal visible light image, while the IR camera captures an image that differs between a live person and a photograph of that person. Using this liveness detection approach along with its internal face recognition capability, the SLN-VIZNAS-IOT kit provides offline, anti-spoofing face recognition capability in a package measuring about 30 x 40 millimeters (mm) (Figure 5).

Figure 5: The NXP SLN-VIZNAS-IOT hardware kit integrates a dual camera system for liveness detection (top) and a vision application board (bottom) with a connected module to provide a drop-in solution for offline face recognition with anti-spoofing capability. (Image source: NXP)
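The way liveness detection and recognition combine into a single access decision can be illustrated with a minimal sketch. The `FrameResult` structure, the `AccessDecision` enumeration, and the `decide_access()` function are hypothetical names introduced here for illustration only; they are not part of the NXP software, which reports these results through its own callbacks.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical per-frame results; illustrative names, not NXP's API. */
typedef struct {
    bool face_detected; /* a face was found in the frame */
    bool ir_live;       /* IR image is consistent with a live person */
    bool rgb_match;     /* RGB face matched a registered user */
} FrameResult;

typedef enum { ACCESS_DENIED = 0, ACCESS_GRANTED = 1 } AccessDecision;

/* Grant access only when every check passes. A printed photograph may
 * match a registered face in RGB, but it should fail the IR liveness
 * check and is therefore rejected before the match is considered. */
AccessDecision decide_access(const FrameResult *r)
{
    assert(r != NULL);
    if (!r->face_detected) {
        return ACCESS_DENIED;
    }
    if (!r->ir_live) {
        return ACCESS_DENIED;
    }
    return r->rgb_match ? ACCESS_GRANTED : ACCESS_DENIED;
}
```

In this sketch, a frame with a matching face but a failed IR check is denied, which is the behavior expected when a photograph is presented to the cameras.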

Getting started with the SLN-VIZNAS-IOT kit

The NXP SLN-VIZNAS-IOT kit comes ready-to-use with built-in face recognition models. Developers plug in a USB cable and touch a button on the kit to perform a simple manual face registration using the preloaded “elock” application and the accompanying mobile app (Figure 6, left). After registration, the mobile app will display a “welcome home” message and “unlocked” label when the kit authenticates the registered face (Figure 6, right).

Figure 6: The NXP SLN-VIZNAS-IOT hardware kit works out of the box, utilizing a companion app to register a face (left) and recognize registered faces (right). (Image source: NXP)

The kit’s Oasis Lite face recognition software processes models from its database of up to 3000 RGB faces with a recognition accuracy of 99.6%, and up to 100 IR faces with an anti-spoofing accuracy of 96.5%. As noted earlier, the NXP hardware/software solution needs less than one second (s) to perform face detection, image alignment, quality check, liveness detection, and recognition over a range from 0.2 to 1.0 meters (m). In fact, the system supports an alternate “light” inference model capable of performing this same sequence in less than 0.5 s but supports a smaller maximum database size of 1000 RGB faces and 50 IR faces.
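The tradeoff between the two inference models can be expressed as a simple selection rule. The following is a sketch only: the `ModelProfile` structure and `select_profile()` function are hypothetical and do not appear in the NXP SDK, and the capacity and latency values are the upper bounds quoted above, treated here as planning assumptions rather than guaranteed figures.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical description of an inference model's limits. */
typedef struct {
    const char *name;
    int max_rgb_faces;  /* maximum registered RGB faces */
    int max_ir_faces;   /* maximum registered IR faces */
    int max_latency_ms; /* upper bound on the recognition sequence */
} ModelProfile;

/* Ordered fastest first, using the figures quoted in the text. */
static const ModelProfile k_profiles[] = {
    { "light",  1000,  50,  500 },
    { "normal", 3000, 100, 1000 },
};

/* Return the fastest profile that holds the requested database and
 * fits the latency budget, or NULL if no profile satisfies both. */
const ModelProfile *select_profile(int rgb_faces, int ir_faces,
                                   int latency_budget_ms)
{
    assert(rgb_faces >= 0 && ir_faces >= 0);
    for (size_t i = 0; i < sizeof k_profiles / sizeof k_profiles[0]; ++i) {
        const ModelProfile *p = &k_profiles[i];
        if (rgb_faces <= p->max_rgb_faces &&
            ir_faces <= p->max_ir_faces &&
            p->max_latency_ms <= latency_budget_ms) {
            return p;
        }
    }
    return NULL;
}
```

For example, a product that must hold 2500 RGB faces cannot use the light model, so a 500 ms budget would leave no valid choice and the function would return NULL.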

Building custom face recognition applications

Used as is, the NXP SLN-VIZNAS-IOT kit lets developers quickly evaluate, prototype and develop face recognition applications. When creating custom hardware solutions, the kit serves as a complete reference design with full schematics and a detailed bill of materials (BOM). For software development, programmers can use the NXP MCUXpresso integrated development environment (IDE) with FreeRTOS support and configuration tools. For this application, developers simply use NXP’s online MCUXpresso SDK Builder to configure their software development environment with NXP’s VIZNAS SDK, which includes the NXP Oasis Lite machine learning vision engine (Figure 7).

Figure 7: NXP provides a comprehensive software environment that executes the NXP Oasis Lite runtime library and utility middleware on the FreeRTOS operating system. (Image source: NXP)

The software package includes complete source code for the operating environment as well as the elock sample application mentioned earlier. NXP does not provide source code for its proprietary Oasis Lite engine or for the models. Instead, developers work with the Oasis Lite runtime library using the provided application programming interface (API), which includes a set of intuitive function calls to perform supported operations. In addition, developers use a provided set of C defines and structures to specify various parameters including image size, memory allocation, callbacks and enabled functions used by the system when starting up the Oasis Lite runtime environment (Listing 1).

    typedef struct {
        //max input image height, width and channel, min_face: minimum face can be detected
        int height;
        int width;

        //only valid for RGB images; for IR image, always GREY888 format
        OASISLTImageFormat_t img_format;
        OASISLTImageType_t img_type;

        //min_face should not smaller than 40
        int min_face;

        /*memory pool pointer, this memory pool should only be used by OASIS LIB*/
        char* mem_pool;

        /*memory pool size*/
        int size;

        /*output parameter,indicate authenticated or not*/
        int auth;

        /*callback functions provided by caller*/
        InfCallbacks_t cbs;

        /*what functions should be enabled in OASIS LIB*/
        uint8_t enable_flags;

        /*only valid when OASIS_ENABLE_EMO is activated*/
        OASISLTEmoMode_t emo_mode;

        /*false accept rate*/
        OASISLTFar_t false_accept_rate;

        /*model class */
        OASISLTModelClass_t mod_class;
    } OASISLTInitPara_t;

Listing 1: Developers can modify software execution parameters by modifying the contents of structures such as the one shown here for Oasis Lite runtime initialization. (Code source: NXP)
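Before handing a parameter block to a runtime, it can be useful to sanity-check the values. The sketch below uses a stand-in structure, `DemoInitPara`, that mirrors only a few field names from Listing 1; it is not the NXP header, and the `demo_init_para_valid()` helper is hypothetical. The lower limit of 40 for `min_face` comes from the comment in Listing 1, while the other checks are reasonable assumptions rather than documented requirements.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Stand-in mirroring a few fields of Listing 1; NOT the NXP header. */
typedef struct {
    int height;     /* maximum input image height */
    int width;      /* maximum input image width */
    int min_face;   /* minimum detectable face size */
    char *mem_pool; /* memory pool reserved for the library */
    int size;       /* memory pool size in bytes */
} DemoInitPara;

/* Lower limit taken from the comment in Listing 1. */
#define DEMO_MIN_FACE_LIMIT 40

/* Return true when the parameters are plausible (assumed checks). */
bool demo_init_para_valid(const DemoInitPara *p)
{
    assert(p != NULL);
    if (p->height <= 0 || p->width <= 0) {
        return false;
    }
    if (p->min_face < DEMO_MIN_FACE_LIMIT) {
        return false;
    }
    /* Assumption: the smallest face cannot exceed the frame itself. */
    if (p->min_face > p->height || p->min_face > p->width) {
        return false;
    }
    if (p->mem_pool == NULL || p->size <= 0) {
        return false;
    }
    return true;
}
```

A check of this kind would typically run once at startup, before the initialization call, so that a misconfigured build fails early rather than during the first frame.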

The elock sample application code demonstrates the key design patterns for launching Oasis as a task running under FreeRTOS, initializing the environment and entering its normal run stage. In the run stage, the runtime environment operates on each frame of an image, executing the provided callback functions associated with each event defined in the environment (Listing 2).

    typedef enum {
        /*indicate the start of face detection, user can update frame data if it is needed.
         * all parameter in callback parameter is invalid.*/
        OASISLT_EVT_DET_START,

        /*The end of face detection.
         *if a face is found, pfaceBox(OASISLTCbPara_t) indicated the rect(left,top,right,bottom point value)
         *info and landmark value of the face.
         *if no face is found,pfaceBox is NULL, following event will not be triggered for current frame.
         *other parameter in callback parameter is invalid */
        OASISLT_EVT_DET_COMPLETE,

        /*Face quality check is done before face recognition*/
        OASISLT_EVT_QUALITY_CHK_START,
        OASISLT_EVT_QUALITY_CHK_COMPLETE,

        /*Start of face recognition*/
        OASISLT_EVT_REC_START,

        /*The end of face recognition.
         * when face feature in current frame is gotten, GetRegisteredFaces callback will be called to get all
         * faces feature registered and OASIS lib will try to search this face in registered faces, if this face
         * is matched, a valid face ID will be set in callback parameter faceID and corresponding simularity(indicate
         * how confidence for the match) also will be set.
         * if no face match, a invalid(INVALID_FACE_ID) will be set.*/
        OASISLT_EVT_REC_COMPLETE,

        /*start of emotion recognition*/
        OASISLT_EVT_EMO_REC_START,

        /*End of emotion recognition, emoID indicate which emotion current face is.*/
        OASISLT_EVT_EMO_REC_COMPLETE,

        /*if user set a registration flag in a call of OASISLT_run and a face is detected, this two events will be notified
         * for auto registration mode, only new face(not recognized) is added(call AddNewFace callback function)
         * for manu registration mode, face will be added forcely.
         * for both cases, face ID of new added face will be set in callback function */
        OASISLT_EVT_REG_START,

        /*when registration start, for each valid frame is handled,this event will be triggered and indicate
         * registration process is going forward a little.
         * */
        OASISLT_EVT_REG_IN_PROGRESS,
        OASISLT_EVT_REG_COMPLETE,
        OASISLT_EVT_NUM
    } OASISLTEvt_t;

Listing 2: The Oasis Lite runtime recognizes a series of events documented as an enumerated set in the Oasis Lite runtime header file. (Code source: NXP)
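The per-frame event flow described in these comments can be modeled with a small simulation. This is a sketch under stated assumptions, not the Oasis Lite implementation: the `DemoEvt` enumeration, the `DemoEvtHandler` type, and the `demo_run_frame()` and `demo_count_handler()` functions are hypothetical, and only the detection, quality check, and recognition events are modeled. The one rule taken from Listing 2 is that no further events are triggered for a frame in which no face is found.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Simplified stand-in for the event set; not the NXP enumeration. */
typedef enum {
    DEMO_EVT_DET_START,
    DEMO_EVT_DET_COMPLETE,
    DEMO_EVT_QUALITY_CHK_START,
    DEMO_EVT_QUALITY_CHK_COMPLETE,
    DEMO_EVT_REC_START,
    DEMO_EVT_REC_COMPLETE,
    DEMO_EVT_NUM
} DemoEvt;

typedef void (*DemoEvtHandler)(DemoEvt evt, void *user_data);

/* Simulate one frame: deliver events in order and stop after
 * detection completes if no face was found. Returns the number
 * of events delivered to the handler. */
int demo_run_frame(bool face_found, DemoEvtHandler handler, void *user_data)
{
    assert(handler != NULL);
    int delivered = 0;
    for (int e = DEMO_EVT_DET_START; e < DEMO_EVT_NUM; ++e) {
        handler((DemoEvt)e, user_data);
        ++delivered;
        if (e == DEMO_EVT_DET_COMPLETE && !face_found) {
            break; /* remaining events are skipped for this frame */
        }
    }
    return delivered;
}

/* Example handler: user_data points to an int[DEMO_EVT_NUM] array
 * that counts how many times each event was seen. */
void demo_count_handler(DemoEvt evt, void *user_data)
{
    int *counts = (int *)user_data;
    assert(counts != NULL);
    counts[evt]++;
}
```

Passing application state through a `user_data` pointer, as the handler in this sketch does, keeps the handler free of global variables and mirrors the `user_data` argument visible in the sample application's event handler signature.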

The sample application can provide developers with step-by-step debug messages describing the results associated with each event processed by the event handler (EvtHandler). For example, after the quality check completes (OASISLT_EVT_QUALITY_CHK_COMPLETE), the system prints out debug messages describing the result, and after face recognition completes (OASISLT_EVT_REC_COMPLETE), the system pulls the user ID and name from its database for recognized faces and prints out that information (Listing 3).

    static void EvtHandler(ImageFrame_t *frames[], OASISLTEvt_t evt, OASISLTCbPara_t *para, void *user_data)
    {
        [code redacted for simplification]

            case OASISLT_EVT_QUALITY_CHK_COMPLETE:
            {
                UsbShell_Printf("[OASIS]:quality chk res:%d\r\n", para->qualityResult);

                pQMsg->msg.info.irLive = para->reserved[5];
                pQMsg->msg.info.front = para->reserved[1];
                pQMsg->msg.info.blur = para->reserved[3];
                pQMsg->msg.info.rgbLive = para->reserved[8];

                if (para->qualityResult == OASIS_QUALITY_RESULT_FACE_OK_WITHOUT_GLASSES ||
                    para->qualityResult == OASIS_QUALITY_RESULT_FACE_OK_WITH_GLASSES)
                {
                    UsbShell_DbgPrintf(VERBOSE_MODE_L2, "[EVT]:ok!\r\n");
                }
                else if (OASIS_QUALITY_RESULT_FACE_SIDE_FACE == para->qualityResult)
                {
                    UsbShell_DbgPrintf(VERBOSE_MODE_L2, "[EVT]:side face!\r\n");
                }
                else if (para->qualityResult == OASIS_QUALITY_RESULT_FACE_TOO_SMALL)
                {
                    UsbShell_DbgPrintf(VERBOSE_MODE_L2, "[EVT]:Small Face!\r\n");
                }
                else if (para->qualityResult == OASIS_QUALITY_RESULT_FACE_BLUR)
                {
                    UsbShell_DbgPrintf(VERBOSE_MODE_L2, "[EVT]: Blurry Face!\r\n");
                }
                else if (para->qualityResult == OASIS_QUALITY_RESULT_FAIL_LIVENESS_IR)
                {
                    UsbShell_DbgPrintf(VERBOSE_MODE_L2, "[EVT]: IR Fake Face!\r\n");
                }
                else if (para->qualityResult == OASIS_QUALITY_RESULT_FAIL_LIVENESS_RGB)
                {
                    UsbShell_DbgPrintf(VERBOSE_MODE_L2, "[EVT]: RGB Fake Face!\r\n");
                }
            }
            break;

        [code redacted for simplification]

            case OASISLT_EVT_REC_COMPLETE:
            {
                int diff;
                unsigned id = para->faceID;
                OASISLTRecognizeRes_t recResult = para->recResult;

                timeState->rec_comp = Time_Now();
                pQMsg->msg.info.rt = timeState->rec_start - timeState->rec_comp;
                face_info.rt = pQMsg->msg.info.rt;

    #ifdef SHOW_FPS
                /*pit timer unit is us*/
                timeState->rec_fps++;
                diff = abs(timeState->rec_fps_start - timeState->rec_comp);
                if (diff > 1000000 / PIT_TIMER_UNIT)
                {
                    // update fps
                    pQMsg->msg.info.recognize_fps = timeState->rec_fps * 1000.0f / diff;
                    timeState->rec_fps = 0;
                    timeState->rec_fps_start = timeState->rec_comp;
                }
    #endif

                memset(pQMsg->msg.info.name, 0x0, sizeof(pQMsg->msg.info.name));

                if (recResult == OASIS_REC_RESULT_KNOWN_FACE)
                {
                    std::string name;
                    UsbShell_DbgPrintf(VERBOSE_MODE_L2, "[OASIS]:face id:%d\r\n", id);
                    DB_GetName(id, name);
                    memcpy(pQMsg->msg.info.name, name.c_str(), name.size());
                    face_info.recognize = true;
                    face_info.name = std::string(name);
                    UsbShell_DbgPrintf(VERBOSE_MODE_L2, "[OASIS]:face id:%d name:%s\r\n", id, pQMsg->msg.info.name);
                }
                else
                {
                    // face is not recognized, do nothing
                    UsbShell_DbgPrintf(VERBOSE_MODE_L2, "[OASIS]:face unrecognized\r\n");
                    face_info.recognize = false;
                }

                VIZN_RecognizeEvent(gApiHandle, face_info);
            }
            break;

Listing 3: As shown in this snippet from a sample application in the NXP software distribution, an event handler processes events encountered during the face recognition sequence. (Code source: NXP)

Besides supporting face recognition processing requirements, the NXP SLN-VIZNAS-IOT software is designed to protect the operating environment. To ensure runtime security, the system is designed to verify the integrity and authenticity of each signed image loaded into the system using a certificate stored in the SLN-VIZNAS-IOT kit’s filesystem. As this verification sequence starts with a trusted bootloader stored in read-only memory (ROM), this process provides a chain of trust for running application firmware. Also, because code signing and verification can slow development, this verification process is designed to be bypassed during software design and debug. In fact, the SLN-VIZNAS-IOT kit comes preloaded with signed images, but code signature verification is bypassed by default. Developers can easily set options to enable full code signature verification for production.
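The chain-of-trust idea, including the development-time bypass, can be sketched in a few lines. This is an illustration only and not NXP's boot code: the `BootStage` structure and the `verify_chain()` function are hypothetical, and the `toy_digest()` function is a 32-bit FNV-1a hash used as a stand-in for real certificate-based signature verification. FNV-1a is not cryptographically secure and would not be suitable for production use.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Toy stand-in for a signature check: 32-bit FNV-1a hash.
 * NOT secure; a real system verifies a cryptographic signature. */
static uint32_t toy_digest(const uint8_t *data, size_t len)
{
    uint32_t h = 2166136261u; /* FNV offset basis */
    for (size_t i = 0; i < len; ++i) {
        h ^= data[i];
        h *= 16777619u;       /* FNV prime */
    }
    return h;
}

/* Hypothetical description of one image in the boot sequence. */
typedef struct {
    const uint8_t *image;
    size_t len;
    uint32_t expected_digest; /* placeholder for a real signature */
} BootStage;

/* Check each stage in boot order. Returns -1 if every stage passes
 * (or if verification is bypassed for development), otherwise the
 * index of the first stage that fails. */
int verify_chain(const BootStage *stages, size_t count, bool bypass)
{
    assert(stages != NULL || count == 0);
    if (bypass) {
        return -1; /* development mode: images are not checked */
    }
    for (size_t i = 0; i < count; ++i) {
        if (toy_digest(stages[i].image, stages[i].len) !=
            stages[i].expected_digest) {
            return (int)i;
        }
    }
    return -1;
}
```

Because each stage is checked before control would pass to it, a modified image halts the sequence at that stage, whereas the bypass flag accepts any image, which is why enabling full verification matters for production builds.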

Along with the runtime environment and associated sample application code, NXP provides Android mobile apps with full Java source code. One app, the VIZNAS FaceRec Manager, provides a simple interface for registering faces and managing users. Another app, the VIZNAS Companion app, allows users to provision the kit with Wi-Fi credentials using an existing Wi-Fi or BLE connection.

Conclusion

Face recognition offers an effective approach for authenticating access to smart products, but implementing it has typically required local high-performance computing or always-on high-bandwidth connectivity for rapid responses. It has also been a target of spoofing and is subject to concerns about user privacy.

As shown, a specialized processor and software library from NXP Semiconductors offer an alternative approach that can accurately perform offline face recognition in less than a second without a cloud connection, while mitigating spoofing attempts.