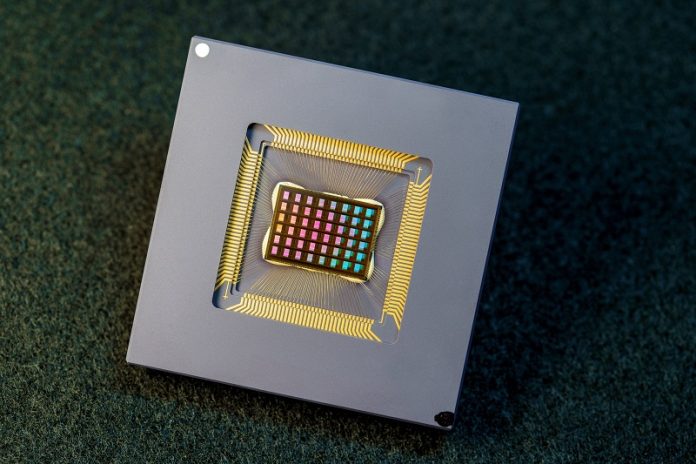

An international team of researchers has designed and built a chip that runs computations directly in memory and can run a wide variety of AI applications, all at a fraction of the energy consumed by computing platforms for general-purpose AI computing.

The NeuRRAM neuromorphic chip brings AI a step closer to running on a broad range of edge devices, disconnected from the cloud, where they can perform sophisticated cognitive tasks anywhere and anytime without relying on a network connection to a centralized server. Applications abound in every corner of the world and every facet of our lives, and range from smartwatches, VR headsets and smart earbuds to smart sensors in factories and rovers for space exploration.

The NeuRRAM chip is not only twice as energy efficient as state-of-the-art “compute-in-memory” chips, an innovative class of hybrid chips that run computations in memory, but it also delivers results that are just as accurate as those of conventional digital chips. Conventional AI platforms are a lot bulkier and typically are constrained to using large data servers operating in the cloud.

In addition, the NeuRRAM chip is highly versatile and supports many different neural network models and architectures. As a result, the chip can be used for many different applications, including image recognition and reconstruction as well as voice recognition.

The conventional wisdom is that the higher efficiency of compute-in-memory is at the cost of versatility, but our NeuRRAM chip obtains efficiency while not sacrificing versatility.

Currently, AI computing is both power-hungry and computationally expensive. Most AI applications on edge devices involve moving data from the devices to the cloud, where the AI processes and analyzes it. Then the results are moved back to the device. That’s because most edge devices are battery-powered and as a result only have a limited amount of power that can be dedicated to computing.

By reducing the power consumption needed for AI inference at the edge, this NeuRRAM chip could lead to more robust, smarter and more accessible edge devices and smarter manufacturing. It could also lead to better data privacy, as the transfer of data from devices to the cloud comes with increased security risks.

On AI chips, moving data from memory to computing units is one major bottleneck.

It’s the equivalent of doing an eight-hour commute for a two-hour work day.

To solve this data transfer issue, researchers used what is known as resistive random-access memory (RRAM), a type of non-volatile memory that allows for computation directly within memory rather than in separate computing units. RRAM and other emerging memory technologies used as synapse arrays for neuromorphic computing were pioneered in the lab of Philip Wong, Wan’s advisor at Stanford and a main contributor to this work. Computation with RRAM chips is not necessarily new, but generally, it leads to a decrease in the accuracy of the computations performed on the chip and a lack of flexibility in the chip’s architecture.

Compute-in-memory has been common practice in neuromorphic engineering since it was introduced more than 30 years ago. What is new with NeuRRAM is that the extreme efficiency now goes together with great flexibility for diverse AI applications with almost no loss in accuracy over standard digital general-purpose compute platforms.

A carefully crafted methodology was key to the work with multiple levels of “co-optimization” across the abstraction layers of hardware and software, from the design of the chip to its configuration to run various AI tasks. In addition, the team made sure to account for various constraints that span from memory device physics to circuits and network architecture.

Chip performance

Researchers measured the chip’s energy efficiency using a metric known as the energy-delay product, or EDP. EDP combines both the amount of energy consumed for every operation and the amount of time it takes to complete the operation. By this measure, the NeuRRAM chip achieves 1.6 to 2.3 times lower EDP (lower is better) and 7 to 13 times higher computational density than state-of-the-art chips.
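As a rough illustration of how the metric behaves, EDP can be treated as the product of energy per operation and time per operation. The short Python sketch below assumes that simple definition; the function name and the energy and delay figures are hypothetical and are not measurements from the chip.

```python
def energy_delay_product(energy_joules: float, delay_seconds: float) -> float:
    """Return energy per operation multiplied by time per operation.

    A lower value is better: the chip spends less energy, finishes sooner, or both.
    """
    return energy_joules * delay_seconds


# Hypothetical figures, chosen only to illustrate the arithmetic.
baseline_edp = energy_delay_product(energy_joules=2.0e-12, delay_seconds=10.0e-9)
candidate_edp = energy_delay_product(energy_joules=1.0e-12, delay_seconds=10.0e-9)

# Ratio greater than 1 means the candidate has the lower (better) EDP.
improvement = baseline_edp / candidate_edp
print(f"EDP improvement: {improvement:.1f}x")
```

Because the two quantities are multiplied, a chip that halves its energy per operation but takes twice as long ends up with the same EDP, which is why the metric rewards designs that are both frugal and fast.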

Researchers ran various AI tasks on the chip. It achieved 99% accuracy on a handwritten digit recognition task; 85.7% on an image classification task; and 84.7% on a Google speech command recognition task. The chip also achieved a 70% reduction in image-reconstruction error on an image-recovery task. These results are comparable to those of existing digital chips that perform computation at the same bit precision, but with drastic savings in energy.

Researchers point out that one key contribution of the paper is that all the results featured are obtained directly on the hardware. In many previous works on compute-in-memory chips, AI benchmark results were often obtained partially by software simulation.

The next steps include improving architectures and circuits and scaling the design to more advanced technology nodes. Researchers also plan to tackle other applications, such as spiking neural networks.

New architecture

The key to NeuRRAM’s energy efficiency is an innovative method to sense output in memory. Conventional approaches use voltage as input and measure current as the result. But this leads to the need for more complex and more power-hungry circuits. In NeuRRAM, the team engineered a neuron circuit that senses voltage and performs analog-to-digital conversion in an energy-efficient manner. This voltage-mode sensing can activate all the rows and all the columns of an RRAM array in a single computing cycle, allowing higher parallelism.
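The difference between the two sensing styles can be sketched with an idealized resistive crossbar. The Python example below is an assumption-laden simplification, not the team's circuit: it treats each RRAM cell as a pure conductance, models conventional sensing as a sum of currents per column, and models voltage-mode sensing as a floating column that settles to the conductance-weighted average of the row voltages. The function names and all numeric values are hypothetical.

```python
from typing import List


def current_mode_mvm(voltages: List[float], conductances: List[List[float]]) -> List[float]:
    """Conventional sensing (idealized): column current I_j = sum_i V_i * G[i][j]."""
    n_cols = len(conductances[0])
    return [
        sum(v * row[j] for v, row in zip(voltages, conductances))
        for j in range(n_cols)
    ]


def voltage_mode_mvm(voltages: List[float], conductances: List[List[float]]) -> List[float]:
    """Voltage sensing (idealized): a floating column settles where the net
    current into it is zero, i.e. V_j = sum_i V_i * G[i][j] / sum_i G[i][j]."""
    n_cols = len(conductances[0])
    weighted = current_mode_mvm(voltages, conductances)
    column_totals = [sum(row[j] for row in conductances) for j in range(n_cols)]
    return [w / g for w, g in zip(weighted, column_totals)]


# Hypothetical 3-row by 2-column array; conductances in siemens, inputs in volts.
G = [
    [1e-6, 2e-6],
    [1e-6, 1e-6],
    [2e-6, 1e-6],
]
V_in = [1.0, 0.0, 0.5]

print("column currents (A):", current_mode_mvm(V_in, G))
print("column voltages (V):", voltage_mode_mvm(V_in, G))
```

In this simplified model the voltage-mode output always stays within the range of the input voltages no matter how many rows are driven at once, which gives some intuition for why activating an entire array in one cycle is practical with this kind of sensing.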

In the NeuRRAM architecture, CMOS neuron circuits are physically interleaved with RRAM weights. This differs from conventional designs, where CMOS circuits are typically on the periphery of the RRAM weights. The neuron’s connections with the RRAM array can be configured to serve as either input or output of the neuron. This allows neural network inference in various data flow directions without incurring overheads in area or power consumption. This in turn makes the architecture easier to reconfigure.
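One way to picture configurable data flow is a single stored weight array that can be driven from either side. The Python sketch below is only a software analogy under that assumption: the `mvm` function and its `direction` argument are hypothetical names, and the weights are arbitrary integers rather than device conductances.

```python
from typing import List


def mvm(weights: List[List[int]], inputs: List[int], direction: str = "forward") -> List[int]:
    """Multiply by the same stored weights in either direction.

    forward:  inputs drive the rows, outputs are read on the columns.
    backward: inputs drive the columns, outputs are read on the rows.
    """
    n_rows, n_cols = len(weights), len(weights[0])
    if direction == "forward":
        if len(inputs) != n_rows:
            raise ValueError("forward pass expects one input per row")
        return [sum(inputs[i] * weights[i][j] for i in range(n_rows)) for j in range(n_cols)]
    if direction == "backward":
        if len(inputs) != n_cols:
            raise ValueError("backward pass expects one input per column")
        return [sum(inputs[j] * weights[i][j] for j in range(n_cols)) for i in range(n_rows)]
    raise ValueError(f"unknown direction: {direction}")


# Hypothetical 3-row by 2-column weight array, stored once.
W = [
    [1, 2],
    [3, 4],
    [5, 6],
]

print(mvm(W, [1, 0, 1], direction="forward"))
print(mvm(W, [1, 1], direction="backward"))
```

Models such as restricted Boltzmann machines need both directions over the same weights, so not having to store a second, transposed copy is where the saving in area would come from in this picture.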

To make sure that the accuracy of the AI computations can be preserved across various neural network architectures, researchers developed a set of hardware-algorithm co-optimization techniques. The techniques were verified on various neural networks, including convolutional neural networks, long short-term memory networks, and restricted Boltzmann machines.

As a neuromorphic AI chip, NeuRRAM performs parallel distributed processing across 48 neurosynaptic cores. To simultaneously achieve high versatility and high efficiency, NeuRRAM supports data parallelism by mapping a layer in the neural network model onto multiple cores for parallel inference on multiple data inputs. NeuRRAM also offers model parallelism by mapping different layers of a model onto different cores and performing inference in a pipelined fashion.
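The two mapping strategies can be sketched in a few lines of Python. This is a scheduling illustration only, assuming round-robin distribution and one layer per core; apart from the 48-core count, every name, layer label and policy here is hypothetical and does not describe the chip's actual toolchain.

```python
from typing import Dict, List, Tuple

NUM_CORES = 48  # core count stated in the article


def data_parallel_assignment(num_inputs: int, replica_cores: List[int]) -> Dict[int, List[int]]:
    """Data parallelism: the same layer is copied onto several cores and
    input indices are dealt out to the copies round-robin."""
    assignment: Dict[int, List[int]] = {core: [] for core in replica_cores}
    for idx in range(num_inputs):
        assignment[replica_cores[idx % len(replica_cores)]].append(idx)
    return assignment


def model_parallel_assignment(layer_names: List[str], first_core: int = 0) -> Dict[str, int]:
    """Model parallelism: consecutive layers are placed on consecutive cores."""
    if first_core + len(layer_names) > NUM_CORES:
        raise ValueError("not enough cores for one layer per core")
    return {name: first_core + k for k, name in enumerate(layer_names)}


def pipeline_schedule(num_inputs: int, num_stages: int) -> List[List[Tuple[int, int]]]:
    """For each time step, list the (stage, input) pairs that are active
    when every stage processes one input per step."""
    steps = []
    for t in range(num_inputs + num_stages - 1):
        steps.append([(s, t - s) for s in range(num_stages) if 0 <= t - s < num_inputs])
    return steps


print(data_parallel_assignment(num_inputs=5, replica_cores=[0, 1]))
print(model_parallel_assignment(["conv1", "conv2", "fc"]))
for t, active in enumerate(pipeline_schedule(num_inputs=2, num_stages=3)):
    print(f"step {t}: {active}")
```

In the pipelined case, once the first input has moved past the first stage, several cores are busy at the same time on different inputs, so throughput approaches one result per step even though each input still passes through every layer in order.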

An international research team

The work is the result of an international team of researchers.

The UC San Diego team designed the CMOS circuits that implement the neural functions interfacing with the RRAM arrays to support the synaptic functions in the chip’s architecture, for high efficiency and versatility. Wan, working closely with the entire team, implemented the design; characterized the chip; trained the AI models; and executed the experiments. Wan also developed a software toolchain that maps AI applications onto the chip.

The RRAM synapse array and its operating conditions were extensively characterized and optimized at Stanford University.

The RRAM array was fabricated and integrated onto CMOS at Tsinghua University.

The team at Notre Dame contributed to both the design and architecture of the chip and the subsequent machine learning model design and training.