A new machine-learning program accurately identifies COVID-19-related conspiracy theories on social media and models how they evolved over time—a tool that could someday help public health officials combat misinformation online.

A lot of machine-learning studies related to misinformation on social media focus on identifying different kinds of conspiracy theories. Instead, we wanted to create a more cohesive understanding of how misinformation changes as it spreads. Because people tend to believe the first message they encounter, public health officials could someday monitor which conspiracy theories are gaining traction on social media and craft factual public information campaigns to preempt widespread acceptance of falsehoods.

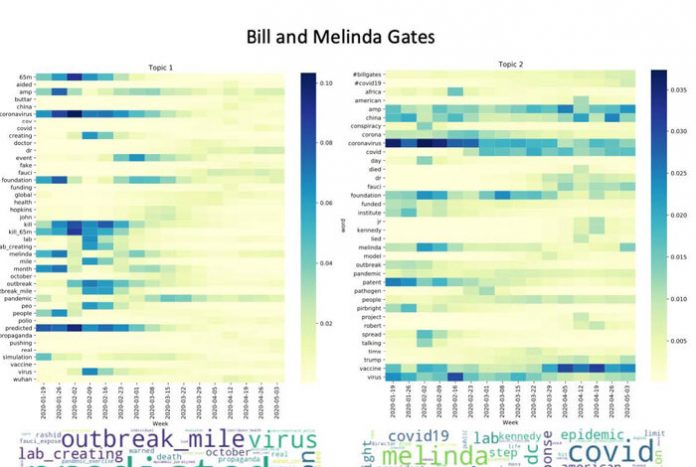

The study used anonymized Twitter data to characterize four COVID-19 conspiracy theory themes and provide context for each through the first five months of the pandemic. The four themes the study examined were that 5G cell towers spread the virus; that the Bill and Melinda Gates Foundation engineered the virus or otherwise has malicious intent related to COVID-19; that the virus was bioengineered or was developed in a laboratory; and that the COVID-19 vaccines, which were then all still in development, would be dangerous.

“We began with a dataset of approximately 1.8 million tweets that contained COVID-19 keywords or were from health-related Twitter accounts,” said Dax Gerts, a computer scientist also in Los Alamos’ Information Systems and Modeling Group, and the study’s co-author. “From this body of data, we identified subsets that matched the four conspiracy theories using pattern filtering, and hand-labeled several hundred tweets in each conspiracy theory category to construct training sets.”
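The pattern-filtering step Gerts describes can be pictured roughly as follows. This is only a minimal sketch: the theme names, the regular expressions, and the helper functions `match_themes` and `build_subsets` are hypothetical illustrations, not the filters the study actually used.

```python
import re

# Hypothetical keyword patterns, one per theme. The study's real filters
# are not given in this article; these are illustrative assumptions only.
THEME_PATTERNS = {
    "5g": re.compile(r"\b5g\b", re.IGNORECASE),
    "gates": re.compile(r"\bgates\b", re.IGNORECASE),
    "lab_origin": re.compile(
        r"\b(bioengineered|lab[- ]?made|laboratory)\b", re.IGNORECASE
    ),
    "vaccines": re.compile(r"\bvaccin\w*", re.IGNORECASE),
}


def match_themes(text):
    """Return the sorted names of all themes whose pattern occurs in text."""
    return sorted(
        name for name, pattern in THEME_PATTERNS.items() if pattern.search(text)
    )


def build_subsets(tweets):
    """Group tweets into one candidate subset per theme.

    A tweet that matches several patterns lands in several subsets.
    """
    subsets = {name: [] for name in THEME_PATTERNS}
    for tweet in tweets:
        for name in match_themes(tweet):
            subsets[name].append(tweet)
    return subsets
```

A pattern match only marks a tweet as a candidate for a theme. It says nothing about whether the tweet promotes or debunks the theory, which is why a hand-labeling pass over each subset is still needed to build training sets.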

Using the data collected for each of the four theories, the team built random forest models, a type of machine-learning or artificial intelligence (AI) model, to categorize tweets as COVID-19 misinformation or not.
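For readers curious what such a classifier looks like in practice, here is a minimal sketch using scikit-learn. It makes several assumptions the article does not confirm: bag-of-words features from `CountVectorizer`, 100 trees, and the hypothetical helper `train_theme_classifier`. The example tweets and their labels are invented placeholders, not data from the study.

```python
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.pipeline import make_pipeline

# Invented placeholder tweets with hand-assigned labels
# (1 = misinformation, 0 = not). Not taken from the study's dataset.
train_texts = [
    "5g towers are spreading the virus",
    "the virus travels through 5g signals",
    "5g radiation causes covid",
    "new 5g tower installed downtown for faster internet",
    "scientists say 5g cannot spread any virus",
    "my phone finally supports 5g",
]
train_labels = [1, 1, 1, 0, 0, 0]


def train_theme_classifier(texts, labels, seed=0):
    """Fit a word-count + random forest pipeline for one theme (hypothetical helper)."""
    model = make_pipeline(
        CountVectorizer(lowercase=True),  # assumed features: raw word counts
        RandomForestClassifier(n_estimators=100, random_state=seed),
    )
    model.fit(texts, labels)
    return model


classifier = train_theme_classifier(train_texts, train_labels)
predictions = classifier.predict(
    ["5g towers spread covid", "great 5g coverage at the airport"]
)
```

Under this sketch, one such model would be trained per theme, each on its own hand-labeled subset. With only a handful of examples the predictions carry little meaning; a realistic setup would need several hundred labeled tweets per theme, as the study describes.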