Researchers at the University of California, Los Angeles (UCLA) have improved the design of their diffractive optical neural networks, taking advantage of the parallelization and scalability of optics-based computational systems.

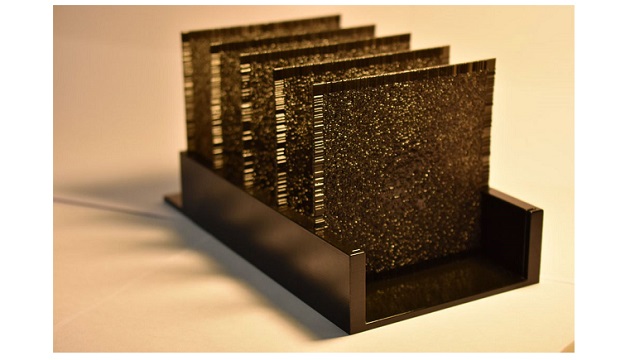

The system uses a series of 3D-printed layers with uneven surfaces that transmit or reflect incoming light. The layers contain tens of thousands of pixels (essentially artificial neurons) that together form an engineered volume of material that computes all-optically. Each input object produces a unique light pathway through the 3D-fabricated layers. Detectors placed behind the layers identify the input object according to where most of the light ends up after it has traveled through the layers.
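The paragraph above describes a forward pass: light diffracts from layer to layer, each pixel modulates it, and the detectors read out where the light lands. Below is a minimal numerical sketch of that idea, assuming transmissive, phase-only layers, free-space propagation computed with the angular spectrum method, and square detector regions. The grid size, wavelength, pixel pitch, layer spacing, detector layout, and all function names are hypothetical choices for illustration, not UCLA's published parameters or code, and the masks are random rather than trained.

```python
import numpy as np


def angular_spectrum_propagate(field, distance, wavelength, pixel_pitch):
    """Propagate a square complex field through free space (angular spectrum method).

    No zero-padding is used, so light wraps around the grid edges; a careful
    simulator would pad the field first.
    """
    n = field.shape[0]
    fx = np.fft.fftfreq(n, d=pixel_pitch)
    fxx, fyy = np.meshgrid(fx, fx, indexing="ij")
    arg = 1.0 / wavelength**2 - fxx**2 - fyy**2
    kz = 2 * np.pi * np.sqrt(np.maximum(arg, 0.0))
    # Evanescent components (arg <= 0) are discarded
    transfer = np.where(arg > 0, np.exp(1j * kz * distance), 0.0)
    return np.fft.ifft2(np.fft.fft2(field) * transfer)


def forward(input_amplitude, phase_masks, layer_gap, wavelength, pixel_pitch):
    """Pass light through phase-only diffractive layers and return output intensity."""
    field = input_amplitude.astype(complex)
    for phase in phase_masks:
        field = angular_spectrum_propagate(field, layer_gap, wavelength, pixel_pitch)
        field = field * np.exp(1j * phase)  # each pixel ("neuron") delays the light
    field = angular_spectrum_propagate(field, layer_gap, wavelength, pixel_pitch)
    return np.abs(field) ** 2


def make_detector_regions(n, num_detectors, size):
    """Hypothetical layout: square detectors in two rows across the output plane."""
    per_row = (num_detectors + 1) // 2
    step = n // (per_row + 1)
    rows = (n // 3, 2 * n // 3)
    return [(rows[k // per_row] - size // 2, step * (k % per_row + 1) - size // 2, size)
            for k in range(num_detectors)]


def detector_signals(intensity, regions):
    """Total light collected by each (row, col, size) square detector."""
    return np.array([intensity[r:r + s, c:c + s].sum() for r, c, s in regions])


# Toy run with untrained (random) masks; every value here is an assumption
N = 64
WAVELENGTH = 0.75e-3   # ~0.4 THz illumination, in metres
PITCH = 0.4e-3         # neuron pitch, in metres
LAYER_GAP = 30e-3      # spacing between layers, in metres

rng = np.random.default_rng(0)
image = np.zeros((N, N))
image[24:40, 28:36] = 1.0  # bright rectangle standing in for an input object
masks = [rng.uniform(0.0, 2 * np.pi, (N, N)) for _ in range(5)]

intensity = forward(image, masks, LAYER_GAP, WAVELENGTH, PITCH)
signals = detector_signals(intensity, make_detector_regions(N, 10, 6))
predicted = int(np.argmax(signals))  # the detector that collects the most light wins
```

With random masks the predicted class is meaningless; training would adjust the phase values (for example, by gradient descent through a differentiable model like this one) until each class of input steers light onto its own detector.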

The researchers increased the system’s accuracy by adding a second set of detectors, so that each object type is represented by two detectors rather than one. In this differential detection scheme, each object class (e.g., boats, airplanes, cars) is assigned to its own pair of detectors behind a diffractive optical network, and the inferred class is the one whose detector pair produces the largest normalized signal difference.
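The differential readout can be sketched in a few lines. This sketch assumes the normalized signal difference for each class is `(I_pos - I_neg) / (I_pos + I_neg)`, computed from the light collected by that class's positive and negative detectors. The function names and the small `eps` guard are hypothetical, and details such as any scaling applied during training are omitted.

```python
import numpy as np


def differential_scores(signals_pos, signals_neg, eps=1e-12):
    """Normalized difference (I_pos - I_neg) / (I_pos + I_neg) for each class's detector pair."""
    pos = np.asarray(signals_pos, dtype=float)
    neg = np.asarray(signals_neg, dtype=float)
    # eps guards against division by zero when a pair receives no light
    return (pos - neg) / (pos + neg + eps)


def infer_class(signals_pos, signals_neg):
    """Pick the class whose detector pair has the largest normalized difference."""
    return int(np.argmax(differential_scores(signals_pos, signals_neg)))


# Toy detector readings for three classes (arbitrary units, not measured data)
pos = [2.0, 5.0, 1.0]
neg = [1.0, 4.0, 0.2]
print(differential_scores(pos, neg))  # approx. [0.333, 0.111, 0.667]
print(infer_class(pos, neg))          # 2
print(int(np.argmax(pos)))            # 1: a single-detector readout would pick class 1
```

In these toy numbers, class 1 collects the most light on its positive detector, but class 2 has the largest normalized difference, so the two readouts disagree. Normalizing by each pair's total signal keeps a bright but nearly balanced pair from winning outright.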

This differential detection scheme helped the UCLA researchers improve their optical neural network's prediction accuracy on previously unseen objects. Using 10 photodetector pairs behind five diffractive layers with a total of 0.2 million neurons, the researchers achieved, in numerical simulations, blind-testing inference accuracies of 98.54%, 90.54%, and 48.51% on the MNIST, Fashion-MNIST, and grayscale CIFAR-10 data sets, respectively.

Extending this approach to exploit parallelism, the researchers divided the classes of a target data set among multiple jointly trained diffractive neural networks. With class-specific differential detection in jointly optimized diffractive networks operating in parallel, the team's simulations achieved blind-testing accuracies of 98.52%, 91.48%, and 50.82% on MNIST, Fashion-MNIST, and grayscale CIFAR-10, respectively. These results come close to the performance of some earlier generations of all-electronic deep neural networks. Although more recent electronic systems perform better, the researchers suggest that all-optical systems offer advantages in inference speed and power consumption, and that they can be scaled up to accommodate and identify many more objects in parallel.
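One way to picture the class split: several diffractive networks see the same input in parallel, each network's detector pairs cover only its share of the classes, and the final answer is the class with the largest differential score across all networks. The sketch below assumes exactly that decision rule (a global argmax over directly comparable scores) and the normalized difference `(I_pos - I_neg) / (I_pos + I_neg)`. The joint training itself is not shown, and the function names and class bookkeeping are hypothetical.

```python
import numpy as np


def differential_scores(signals_pos, signals_neg, eps=1e-12):
    """Normalized difference (I_pos - I_neg) / (I_pos + I_neg) per detector pair."""
    pos = np.asarray(signals_pos, dtype=float)
    neg = np.asarray(signals_neg, dtype=float)
    return (pos - neg) / (pos + neg + eps)


def parallel_infer(network_outputs, class_assignment):
    """Combine several diffractive networks, each responsible for a subset of classes.

    network_outputs: one (signals_pos, signals_neg) tuple per network
    class_assignment: class_assignment[k][j] is the global class id read out by
        detector pair j of network k (hypothetical bookkeeping)
    """
    if len(network_outputs) != len(class_assignment):
        raise ValueError("need one class list per network")
    all_ids = sorted(c for classes in class_assignment for c in classes)
    if all_ids != list(range(len(all_ids))):
        raise ValueError("each class must be assigned to exactly one detector pair")
    scores = np.empty(len(all_ids))
    for (pos, neg), classes in zip(network_outputs, class_assignment):
        scores[classes] = differential_scores(pos, neg)
    # Assumes scores from different networks are directly comparable
    return int(np.argmax(scores)), scores


# Hypothetical split of 10 classes across two networks that see the same input
assignment = [[0, 1, 2, 3, 4], [5, 6, 7, 8, 9]]
outputs = [
    ([1.0, 1.0, 1.0, 1.0, 3.0], [1.0] * 5),  # network A: class 4 scores 0.5
    ([1.0, 4.0, 1.0, 1.0, 1.0], [1.0] * 5),  # network B: class 6 scores 0.6
]
predicted, scores = parallel_infer(outputs, assignment)
print(predicted)  # 6
```

Because each network only has to separate its own subset of classes, each can devote its detector plane to fewer outputs; the cost is that the final comparison relies on scores from physically separate networks being on the same scale, which joint training would have to encourage.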

“Such a system performs machine-learning tasks with light-matter interaction and optical diffraction inside a 3D-fabricated material structure, at the speed of light and without the need for extensive power, except the illumination light and a simple detector circuitry,” said UCLA professor Aydogan Ozcan. “This advance could enable task-specific smart cameras that perform computation on a scene using only photons and light-matter interaction, making it extremely fast and power-efficient.”

The ultimate result of these advances could be intelligent camera systems that recognize what they see from the patterns of light passing through the network’s 3D structure of engineered materials.