The advanced driver-assistance systems (ADAS) that are making today’s cars safer and enabling the emergence of autonomous vehicles are challenging the automotive electronics industry to achieve new levels of complexity, performance, and safety. The unique demands of these applications have led to the extensive use of complex ASICs that combine digital processing, analogue, RF, and power management functions in a single silicon die. As a result, critical safety features are managed on-chip.

While any non-entertainment automotive electronics are considered mission-critical, ADAS are an especially critical link in the safety chain. A typical ADAS element is responsible for integrating one or more computing elements with several base technologies (e.g. radar/LIDAR, GPS, and wireless communications) to produce actionable, virtually error-free information that enables a driver assist or autopilot system to operate with an extremely high degree of safety. In addition, these elements must meet the auto industry’s stringent space, power, and cost constraints. To meet these challenges, many automotive OEMs and system manufacturers are using SoCs as the foundation of their ADAS and other complex automotive products.

Even before safety management is considered, the development of silicon chips that deliver enough processing power to run complex, multi-threaded embedded software is a considerable challenge in itself. Adding safety constraints to the development of ASICs and SoCs that perform mission-critical driver assist or autopilot functions brings several more types of skills to what was already an interdisciplinary effort.

To produce systems for these applications, where, like human spaceflight, “failure is not an option,” designers and design managers must rethink the hardware and software development methodologies they’ve relied on for many years and consider adopting new approaches.

Industry standards

For years, many aspects of automotive electronics were governed by IEC 61508, a multi-purpose document governing the design of safety-critical electronics in a wide range of applications. IEC 61508 has been complemented, and in many ways surpassed, by the automotive-specific ISO 26262, introduced by the International Organization for Standardization (ISO) in 2011. The shift from IEC 61508 to ISO 26262 was a result of the automotive sector’s high production volumes and growing reliance on distributed development efforts that span multiple suppliers.

Originally divided into 10 parts, ISO 26262 covers the different aspects of the product life cycle from concept onwards, with Part 5 dedicated to hardware development. Since its initial publication, however, numerous critics from the electronics industry have voiced concern that the standard did not properly address issues related to IC development and the management of safety of the intended functionality (SOTIF).

In response, the ISO added Part 11 last year, which is dedicated to semiconductor development. The new part offers extensive guidance on how IC design must be interpreted within the ISO 26262 framework. More recently, ISO 26262 has been complemented by ISO/PAS 21448. Published in 2019, this standard addresses SOTIF in depth, covering topics such as system misuse arising from human error.

Collectively, these two updates (along with years of learning and refinement) give the automotive industry a comprehensive set of standards to tackle electronic system safety, with most automakers stipulating that their tier-1 ECU suppliers comply with ISO 26262 for safety-relevant systems.

Status of ADAS and autonomous systems

The consequences of autonomous vehicle and ADAS failures have already been well documented. Last year saw the first fatality involving a Level-3 (conditionally autonomous) vehicle, with a pedestrian killed by a self-driving Uber. There have been five Level-2 (partially autonomous) vehicle fatalities since 2016, all involving a Tesla running Autopilot.

Looking first at Level-1 systems, a 2017 analysis of 5,000 US accidents suggested ADAS technologies such as lane departure and blind spot warnings cut the rate of crashes by 11% and the rate of injury by 21%.

As for Level 2, yes, there have been fatalities, but those five deaths need to be put into context. First of all, Tesla stipulates that the car is not qualified for hands-off operation and that the driver must be in a position that allows them to immediately assume physical control of the vehicle whenever Autopilot is running. It’s also important to remember that, in November 2018, Tesla reported its Autopilot semi-self-driving technology had been engaged for 1 billion miles (1.6Bn km) and, during that time, there had been three fatalities – a remarkably low rate of 0.3 fatalities per 100 million miles.

In comparison, US human-driven vehicles produced 1.18 fatalities per 100 million miles in 2016. Similarly, in April of 2019 Tesla reported that during Q1 of 2019 their cars registered one accident per 2.87 million miles (4.59m km) when running Autopilot, versus one per 1.76 million miles (2.82m km) when driven without Autopilot running.
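The rates quoted above can be reproduced with simple arithmetic. The following is a minimal sketch using only the figures given in the text; the function and variable names are hypothetical, and the ratios are raw comparisons that do not adjust for where or how the miles were driven.

```python
def per_100m_miles(events: float, miles: float) -> float:
    """Normalize an event count to a rate per 100 million miles."""
    return events / miles * 100_000_000

# Autopilot figures quoted in the text: three fatalities over 1 billion miles.
autopilot_rate = per_100m_miles(3, 1_000_000_000)

# US human-driven figure quoted in the text (2016), already per 100 million miles.
human_rate = 1.18

# Q1 2019 figures quoted in the text: miles travelled per accident.
miles_per_accident_autopilot = 2_870_000
miles_per_accident_manual = 1_760_000

fatality_ratio = human_rate / autopilot_rate
accident_ratio = miles_per_accident_autopilot / miles_per_accident_manual

print(f"Autopilot: {autopilot_rate:.2f} fatalities per 100 million miles")
print(f"Human-driven vs Autopilot fatality rate: {fatality_ratio:.1f}x")
print(f"Miles per accident, Autopilot vs manual: {accident_ratio:.2f}x")
```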

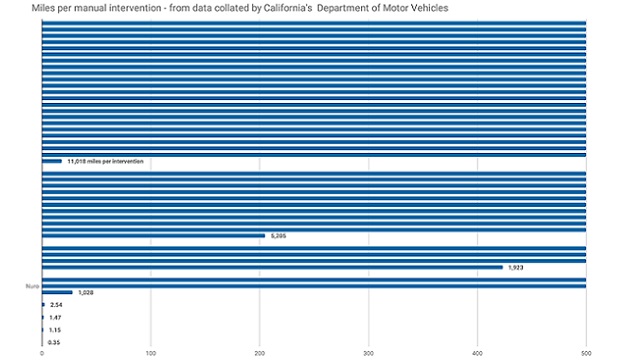

To measure the state of Level 3+ autonomous driver software, we can look to California, which requires autonomous vehicle manufacturers to record and publish the number of manual interventions made on its roads each year and the distance travelled (Figure 1). This data shows that the leading firm, Waymo (formerly Google’s autonomous vehicle division), required only one intervention per 11,000 miles (17,600 km). However, there is a wide range between developers, with the worst-performing company’s vehicles requiring three interventions per mile (2 per km).

So, what types of additional effort are required when developing safety-critical ICs for ADAS and autonomous driving systems? As we’ll see, these efforts need to be applied primarily to project management, development, documentation, and tools.

At the top level, a safety-critical design has to both provide an optimal solution to the requested system functionality and document how this solution can fail, stating the consequences of each failure as well as how the risk has been mitigated to an acceptable level.

ISO 26262 (like all safety standards) provides: a) a framework for the analysis of a system and how it could malfunction, b) a method to document that sufficient measures have been implemented to guarantee system safety, and c) a consistent process for the design and management team, with mechanisms to ensure adequate documentation and traceability.

Developing SoCs under the guidance of ISO 26262 and ISO/PAS 21448 requires the coordination of multiple disciplines, including system architecture, hardware/software partitioning, design verification, and production planning.

During hardware development, the traditional simulation-based approach (from RTL descriptions or schematics) must be supplemented with a special study that identifies operational scenarios in which a series of failing sub-blocks can combine to violate a safety goal, as well as potential solutions to each problem. This type of analysis requires the quantification of random faults and soft errors, and of their impact on the different chip structures: a formidable task, even in a relatively “small” device containing fewer than 100 million gates.
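One way ISO 26262 Part 5 makes this quantification concrete is through hardware architectural metrics such as the single-point fault metric (SPFM) and the latent fault metric (LFM). The sketch below is illustrative only: it assumes per-block failure rates are already known, and the block names and FIT values are invented. The thresholds are the ASIL D targets (SPFM of at least 99%, LFM of at least 90%).

```python
from dataclasses import dataclass

@dataclass
class Block:
    name: str
    total_fit: float     # total failure rate of the safety-related block (FIT)
    residual_fit: float  # single-point and residual faults not covered by a safety mechanism
    latent_fit: float    # multiple-point faults that remain latent (undetected)

def spfm(blocks):
    """Single-point fault metric: 1 - sum(SPF + RF) / sum(total)."""
    total = sum(b.total_fit for b in blocks)
    return 1 - sum(b.residual_fit for b in blocks) / total

def lfm(blocks):
    """Latent fault metric: 1 - sum(latent) / sum(total - SPF - RF)."""
    total = sum(b.total_fit for b in blocks)
    residual = sum(b.residual_fit for b in blocks)
    return 1 - sum(b.latent_fit for b in blocks) / (total - residual)

# Hypothetical failure-rate budget for three blocks of an SoC.
blocks = [
    Block("cpu_core", total_fit=100.0, residual_fit=0.5, latent_fit=4.0),
    Block("sram", total_fit=200.0, residual_fit=1.0, latent_fit=6.0),
    Block("adc", total_fit=50.0, residual_fit=1.0, latent_fit=5.0),
]

ASIL_D_SPFM, ASIL_D_LFM = 0.99, 0.90
meets_asil_d = spfm(blocks) >= ASIL_D_SPFM and lfm(blocks) >= ASIL_D_LFM

print(f"SPFM = {spfm(blocks):.2%}, LFM = {lfm(blocks):.2%}")
print("Meets ASIL D architectural targets:", meets_asil_d)
```

In a real project the per-block rates come from a failure modes, effects, and diagnostic analysis rather than hand-entered numbers, and the metrics are evaluated per safety goal.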

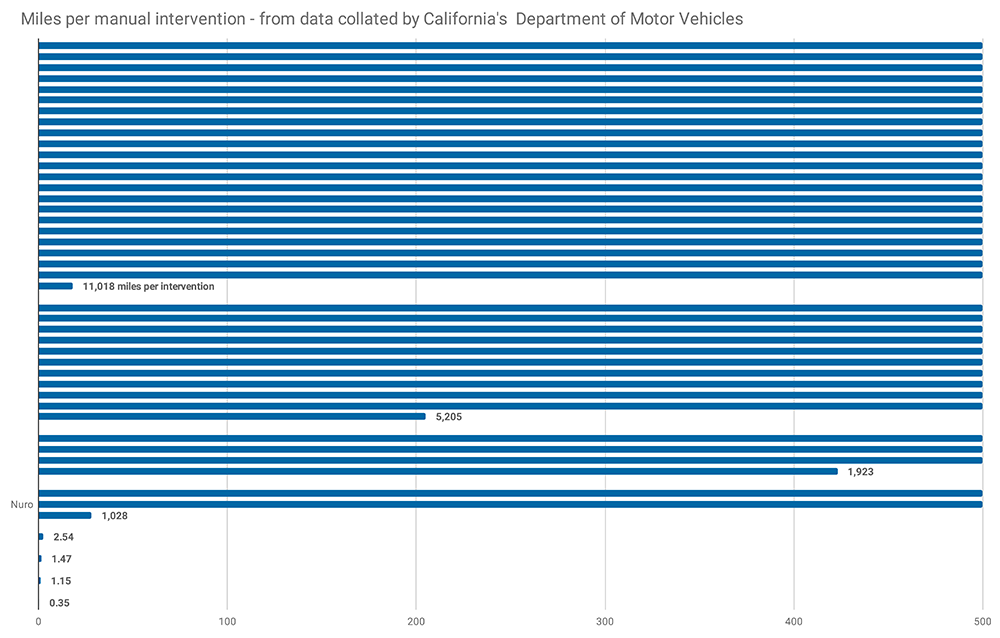

Software engineering for safety-critical applications poses similar challenges. The recent software-induced fatalities in several Boeing 737 Max crashes are only the most notorious examples of the digital time bombs that have wreaked havoc in commercial aircraft. Similarly, the first US fatality involving a self-driving car was caused by a software error that misinterpreted both imaging data (believing a white trailer crossing the road was the sky) and radar data. The software engineer’s job is further complicated in ADAS applications by ethical and moral considerations that must be factored into the design equation. Figure 2 provides a high-level view of some of the considerations involved in adding ethical and moral issues to the design process.

FIGURE 2 – How universal are ethics behind autonomous vehicle decisions? (Adapted from data published in Nature 2018)

Another critical stage in software development is converting live software into a firmware image that can be embedded within the ROM of an SoC. Achieving full product compliance with the governing safety specifications requires a robust process for specifying, coding, documenting, and verifying the software. When preparing to generate a firmware image, it’s advisable to review the currently recommended software coding practices. Some of the more notable suggestions include: restricting language use to a safe subset (as the MISRA C guidelines do for C), using quality metrics such as code or condition coverage, and individually testing every software unit against its requirements. All these steps are necessary for achieving both ISO 26262 and IEC 61508 compliance.
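Testing every software unit against its requirements implies that each requirement can be traced to at least one passing test. As a minimal sketch of such a check (the requirement IDs, requirement texts, test names, and record layout are all invented for illustration and do not come from any particular tool):

```python
# Hypothetical software requirements, keyed by an invented ID scheme.
requirements = {
    "SWR-001": "Watchdog shall reset the core after a missed kick",
    "SWR-002": "CRC shall be checked on every received radar frame",
    "SWR-003": "Firmware shall enter a safe state on an uncorrectable memory error",
}

# Hypothetical unit-test records, each listing the requirements it verifies.
unit_tests = [
    {"name": "test_watchdog_reset", "verifies": ["SWR-001"], "passed": True},
    {"name": "test_crc_reject", "verifies": ["SWR-002"], "passed": True},
    {"name": "test_legacy_boot", "verifies": ["SWR-009"], "passed": True},
]

def trace_report(requirements, unit_tests):
    """Return (requirement IDs with no passing test, tests citing unknown IDs)."""
    verified = {
        req_id
        for test in unit_tests
        if test["passed"]
        for req_id in test["verifies"]
    }
    unverified = sorted(set(requirements) - verified)
    orphans = sorted(
        test["name"]
        for test in unit_tests
        if any(req_id not in requirements for req_id in test["verifies"])
    )
    return unverified, orphans

unverified, orphans = trace_report(requirements, unit_tests)
print("Requirements without a passing test:", unverified)
print("Tests citing unknown requirements:", orphans)
```

A check like this would normally run automatically, so that a gap in either direction blocks generation of the firmware image.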

Safety standards also touch on specific organizational aspects. The principle that “for any work product, the verifier must be independent from the author” is stipulated across safety norms; however, the required level of independence varies between ISO 26262 and IEC 61508, as well as other standards such as DO-178C, for airborne software, and IEC 62304, for medical device software.

The final step in the process is demonstrating to a customer or project partner that an SoC is safe. Safety norms require that the projects involved undergo an independent assessment, with specific variants depending on the safety level. An independent auditor will be needed to verify the safety case, i.e. the collection of documents and work products demonstrating that a project has been properly executed according to the relevant standard.

Considerations in developing safety-critical SoCs

Many companies adhere to international quality standards, holding an ISO 9001:2015 certificate that guarantees all business activities are performed in line with rigorous processes. However, compliance with safety standards such as ISO 26262 or IEC 61508 needs something more.

A key step in any safety-critical ASIC or SoC project is the selection of the EDA tools for logic synthesis, simulation, physical implementation, and verification, which should have already passed the validation process required by the governing standards.

Similarly, a V-shaped model should be adopted for developing safety-critical SoCs and adhered to from conception to final production. This enables robust processes for documentation management, configuration management, and traceability, and ensures that strict hardware and software verification processes are in place, with dedicated approval meetings involving all the project stakeholders.

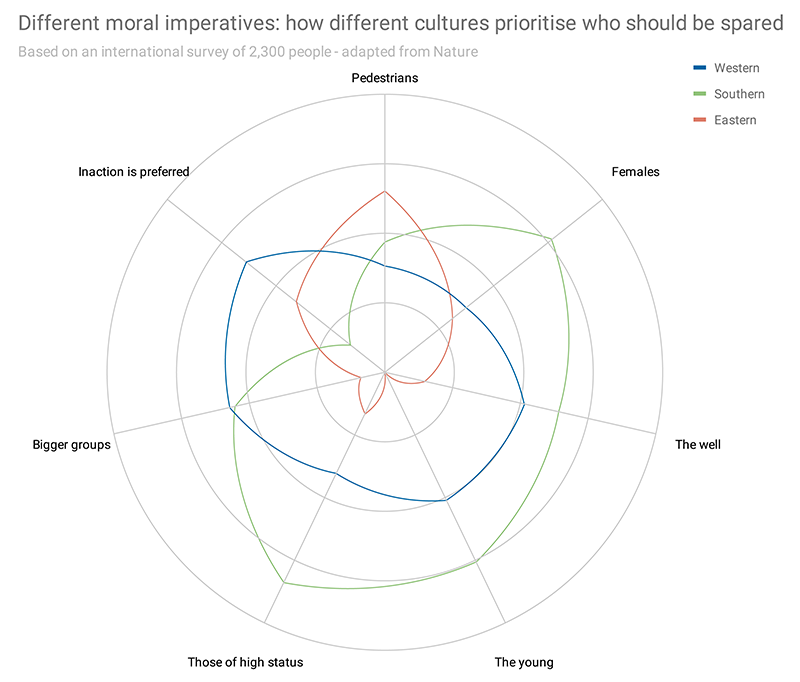

Every safety-critical project needs a thorough safety analysis process. The designer’s expertise plays a crucial role here, both in understanding what can go wrong in a circuit or IP block and in putting the necessary mitigation strategies in place (Figure 3).

FIGURE 3 – The safety analysis process describes the workflow and leads to an independent third-party confirmation, as often required by the relevant norms.

To ensure that the selected safety measures are effective, ISO 26262 (like all credible safety-related standards) requires an additional verification step. In the case of a complex SoC, this is accomplished through the use of dedicated fault injection software tools. These simulate commonly occurring faults (both random faults and soft errors) and verify that they are properly detected and/or corrected in a way that still produces safe system behavior.
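The idea behind a fault injection campaign can be shown with a toy model. The sketch below is an assumption-laden illustration, not a description of any commercial tool: it protects a hypothetical 8-bit register with a single even-parity bit, injects every possible one-bit and two-bit flip, and reports the fraction of faults the parity checker detects.

```python
from itertools import combinations

WIDTH = 8  # data bits in the hypothetical protected register

def parity(value: int) -> int:
    """Return 1 if the number of set bits is odd, else 0."""
    return bin(value).count("1") & 1

def encode(data: int) -> int:
    """Store an even-parity bit in the bit position above the data."""
    return data | (parity(data) << WIDTH)

def fault_detected(codeword: int) -> bool:
    """The checker flags any codeword with an odd number of set bits."""
    return parity(codeword) == 1

def campaign(data: int, flips_per_fault: int) -> float:
    """Inject every combination of bit flips and return the detected fraction."""
    golden = encode(data)
    faults = list(combinations(range(WIDTH + 1), flips_per_fault))
    detected = 0
    for positions in faults:
        faulty = golden
        for bit in positions:
            faulty ^= 1 << bit  # flip one stored bit
        if fault_detected(faulty):
            detected += 1
    return detected / len(faults)

single = campaign(0b10110010, flips_per_fault=1)
double = campaign(0b10110010, flips_per_fault=2)

print(f"Single-bit faults detected: {single:.0%}")
print(f"Double-bit faults detected: {double:.0%}")
```

Parity catches every single-bit flip but no double-bit flip, which is the kind of coverage gap a campaign is meant to expose. Production tools apply the same principle to RTL or gate-level netlists across far larger fault lists.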

By definition, SoCs include processing elements, such as MCUs or DSPs, and their behavior often relies on a software image coded inside the silicon die in the form of a ROM, OTP, or a similar integrated memory element. Debugging embedded software has always been a challenging activity, and the difficulty increases significantly when the code image is inside the silicon it’s running on, making many traditional debugging tools less effective, or even unusable. Instead, co-simulation models of the hardware and software are required to give the developer a holistic view of the system’s behavior, even before the actual silicon is available.

Vendor verification is the last key aspect in developing safety-critical systems. If your device will be used in applications governed by ISO 26262 or a similar standard, the silicon fabs and subcontractors you use must have a demonstrated capability to manufacture parts to automotive safety standards, and should use a communication flow with suppliers and customers that adheres to the strict rules relating to safety plans and development interface agreements (DIAs).

As for the internal organization, a project-independent functional safety (FuSa) management structure is needed, which gives the necessary independence for establishing FuSa processes and provides the required training and support to all the engineers involved in safety-critical products.

For more information, visit: www.ensilica.com