It is commonly said that the human lineage goes back about six million years. But think about it like this: if the Earth had been created one year ago, the human species would be 10 minutes old, and the industrial era would have started 2 seconds ago. We, the most evolved and intelligent species, are actually recently arrived guests on this planet.
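The scale of that analogy is easy to check with a little arithmetic. The sketch below assumes an age of roughly 4.54 billion years for the Earth (a figure not given in this article) and converts durations on the imagined one-year calendar back into real years. Under that assumption, 10 minutes works out to tens of thousands of years and 2 seconds to a few hundred, so the analogy describes our own species rather than a six-million-year lineage.

```python
# Assumption: the Earth is about 4.54 billion years old (not stated in the article).
EARTH_AGE_YEARS = 4.54e9
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60


def real_years(scaled_seconds: float) -> float:
    """Convert a duration on the one-year calendar back into real years."""
    return scaled_seconds / SECONDS_PER_YEAR * EARTH_AGE_YEARS


human_species = real_years(10 * 60)  # "10 minutes old"
industrial_era = real_years(2)       # "started 2 seconds ago"

print(f"10 minutes on the calendar is about {human_species:,.0f} real years")
print(f"2 seconds on the calendar is about {industrial_era:,.0f} real years")
```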

From the discovery of fire onwards, human intelligence has been the primary catalyst for everything we have achieved and everything we care about. The evolution of human beings and the world around them depends crucially on some relatively minor changes that made the human mind. The corollary, of course, is that any further changes that significantly alter the substrate of thinking could have potentially enormous consequences.

Well, we have already seen another big bang that has caused a profound change in that substrate, and we are currently in the midst of a revolution. There has been a major paradigm shift from rule-based AI to machine learning, and from human-machine interfaces to machine-to-machine interfaces, where machines use algorithms to learn from patterns in data and keep getting better, much like a human infant. Reinforcement learning, for example, is the process by which a machine trains itself by trial and error.
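To make "trial and error" concrete, here is a minimal sketch of one of the simplest reinforcement learning setups, an epsilon-greedy agent choosing between slot machines. Everything in it is an illustrative assumption rather than anything taken from a real system: the function name `run_bandit`, the three win rates and the exploration rate `epsilon` are all hypothetical. The agent is never told which machine pays best; it only sees the rewards from its own attempts.

```python
import random


def run_bandit(true_win_rates, steps=5000, epsilon=0.1, seed=0):
    """Learn by trial and error which option pays off most often."""
    rng = random.Random(seed)
    n = len(true_win_rates)
    estimates = [0.0] * n  # the agent's current guess of each win rate
    pulls = [0] * n        # how many times each option has been tried

    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n)  # explore: try something at random
        else:
            action = max(range(n), key=lambda a: estimates[a])  # exploit the best guess

        # The environment answers with a reward of 1 (win) or 0 (loss).
        reward = 1.0 if rng.random() < true_win_rates[action] else 0.0

        # Nudge the running average for this option towards the observed reward.
        pulls[action] += 1
        estimates[action] += (reward - estimates[action]) / pulls[action]

    return estimates, pulls


# Hypothetical win rates, hidden from the agent.
estimates, pulls = run_bandit([0.2, 0.5, 0.8])
favourite = pulls.index(max(pulls))
print("Most-played option:", favourite)
print("Learned win rates:", [round(e, 2) for e in estimates])
```

After a few thousand attempts the agent spends most of its time on the best-paying option, purely from feedback on its own actions. Real systems use far richer models, but the loop of act, observe the reward and adjust is the same idea.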

We encounter AI every day, and the technology is making rapid strides. This could be the new evolution that fundamentally transforms life on our planet. Artificial intelligence has the potential to revolutionize every aspect of daily life: work, mobility, medicine, the economy and communication.

Checkmate on Humanity?

Think about it: you watch a video on Facebook or a reel on Instagram, and gradually you start getting suggestions for new videos in the same category. Then something frightening happens. A chain of automated suggestions starts filling your feed, and you are trapped in a vicious cycle that kills hours of your time.

You keep watching and scrolling without even realizing that somewhere an artificial intelligence is reading and analysing your scrolling pattern and your watch time, learning your habits, and serving you exactly the content that will compel you to spend more and more time on that particular app. In a way, the intelligence is telling the user what to watch. And this happens all the time. You get suggestions when you shop online, or on YouTube. If not regulated, this could be checkmate on human intelligence.

GPT-3 (Generative Pre-trained Transformer 3) is sending tremors across Silicon Valley

Just imagine an AI that can write anything. You feed it poems from a particular poet and it will write a new one with the same rhythm and genre. Or it can write a news article (The Guardian has already published one). It can read an article, answer questions about the information in it and even summarize it for you. A variant of the model can even generate pictures from text descriptions.

GPT-3 is a deep learning model that produces human-like text. It is the third-generation language prediction model created by the San Francisco start-up OpenAI, whose co-founders include Elon Musk. It is better than any prior programme at producing text that could have been written by a human. It is considered a quantum leap because it may prove useful to many companies and has great potential for automating tasks.
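The phrase "language prediction model" simply means a programme that guesses what word comes next. The toy below illustrates only that idea. It is not how GPT-3 works internally: GPT-3 is a very large neural network, whereas this sketch just counts which word most often follows another in a tiny made-up sentence. The function names `train_bigrams` and `predict_next` and the sample text are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it and how often."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def predict_next(following, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    candidates = following.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# A tiny made-up training text; real models learn from billions of words.
corpus = "the cat sat on the mat and the cat slept on the sofa"
model = train_bigrams(corpus)

print(predict_next(model, "the"))  # prints "cat", the word seen most often after "the"
print(predict_next(model, "on"))   # prints "the"
```

Scaling this idea up, from counting word pairs to a neural network trained on a vast amount of text, is what lets a model produce whole paragraphs that read as if a person had written them.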

It can write Java code from a simple text description. Or how about making a mock-up website just by copying and pasting a URL with a description?

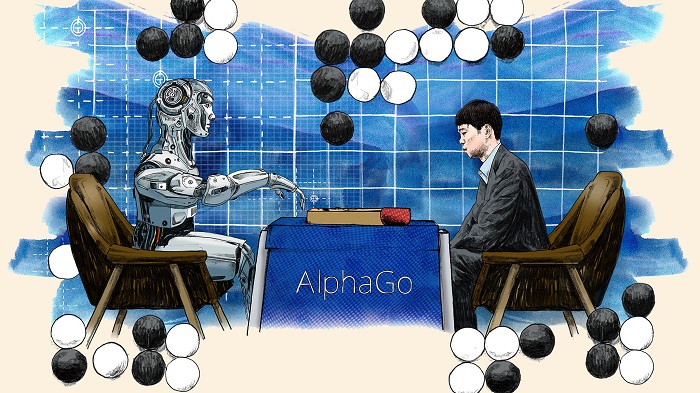

It all started with a Game.

“Go” is arguably one of the most complex games in existence, yet its goal is simple: surround more territory than your opponent. It has been played by humans for the past 2,500 years and is thought to be the oldest board game still being played today. Its complexity is staggering: there are more possible board configurations in Go than there are atoms in the universe.

However, it’s not only humans that are playing this game now. In 2016, Google DeepMind’s AlphaGo beat 18-time world champion Lee Sedol in four out of five games. Normally, a computer beating a human at a game like chess or checkers wouldn’t be that impressive, but Go is different. In countries where the game is very popular, like China, Japan and South Korea, it is not just a game. It is how you learn strategy, and it has an almost spiritual component.

This game is far beyond the reach of exhaustive prediction and cannot be solved with brute force. There are over 10 to the power of 170 possible board configurations. To put that into perspective, there are only about 10 to the power of 80 atoms in the observable universe. AlphaGo was trained using data from real human Go games. It ran through millions of games, learned the techniques used and even came up with new ones that no one had ever seen.
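Numbers this large are hard to picture, so here is a quick sketch of the comparison using Python's arbitrary-size integers. The two powers of ten are the figures quoted above. The 19 by 19 board size and the rough upper bound (every point on the board being empty, black or white, which also counts arrangements the rules would not allow) are assumptions added for illustration.

```python
BOARD_POINTS = 19 * 19             # assumed standard board: 361 points
upper_bound = 3 ** BOARD_POINTS    # each point is empty, black or white (includes illegal boards)

go_configurations = 10 ** 170      # figure quoted in the article
atoms_in_universe = 10 ** 80       # figure quoted in the article

# How many Go configurations there are for every single atom.
ratio = go_configurations // atoms_in_universe

print("Digits in the rough upper bound:", len(str(upper_bound)))
print("Configurations per atom: 1 followed by", len(str(ratio)) - 1, "zeros")
```

Even if every atom in the observable universe were a computer checking one configuration, each would still have an unimaginably long list to get through, which is why brute force is not an option.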

However, a year after AlphaGo’s victory over Lee Sedol, a brand-new AI called AlphaGo Zero beat the original AlphaGo 100 games to 0. That is 100 games in a row. The most impressive part is that it learned to play without any human game data, purely by playing against itself. It was a huge victory for DeepMind and for AI, and a prime example of one type of intelligence beating another.

Artificial intelligence had proven that it could marshal a vast amount of data, beyond anything any human could handle, and use it to teach itself how to predict an outcome. The commercial implications are enormous.

The arguments for the imminent arrival of human-level AI typically appeal to the progress we’ve seen to date in machine learning and assume that it will inevitably lead to superintelligence. In other words, make the current models bigger, give them more data, and voilà: AGI.

Will Advanced AI Turn into Terminators and Take Over Human Civilization?

At the Ford Distinguished Lectures in 1960, the economist Herbert Simon proclaimed that within 20 years machines would be on a par with humans at any task humans can perform. In 1961, Claude Shannon, the founder of information theory, forecast that science-fiction-style robots would emerge within 15 years. The mathematician I.J. Good conceived of a runaway “intelligence explosion,” a process whereby smarter-than-human machines iteratively improve their own intelligence. Writing in 1965, Good predicted that the explosion would arrive before the end of the twentieth century. In 1993, Vernor Vinge called the beginning of this explosion “the singularity” and stated that it would arrive within 30 years. Ray Kurzweil later declared a law of history, the Law of Accelerating Returns, which predicts the singularity’s arrival by 2045. More recently, Elon Musk has claimed that superintelligence is less than five years away, and academics from Stephen Hawking to Nick Bostrom have voiced concerns about the dangers of rogue AI.

The hype is not limited to a handful of public figures. Every few years, surveys ask researchers working in the AI field to predict when we’ll achieve artificial general intelligence (AGI), meaning machines that are general-purpose and at least as intelligent as humans. Median estimates from these surveys give a 10% chance of AGI sometime in the 2020s, and a one-in-two chance of AGI between 2035 and 2050. Leading researchers in the field have also made startling predictions. The CEO of OpenAI writes that in the coming decades, computers “will do almost everything, including making new scientific discoveries that will expand our concept of ‘everything’,” and the co-founder of Google DeepMind has said that “Human-level AI will be passed in the mid-2020s.”

These predictions have consequences. Some have called the arrival of AGI an existential threat, wondering whether we should halt technological progress in order to avert catastrophe. Others are pouring millions in philanthropic funding into averting AI disasters.

Mayank Vashisht | Sub Editor | ELE Times