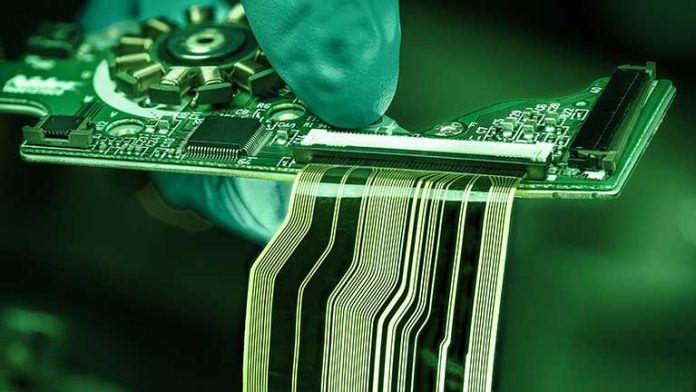

Artificial Intelligence (AI) applications typically involve handling large datasets, which requires multiple distributed CPUs and GPUs communicating in real time. This setup is a hallmark of high-performance computing (HPC) architectures. Routing high-speed digital signals between processing elements calls for chip-to-board and board-to-board connectivity. To meet high-speed requirements, communication protocols and physical standards have been developed around signal integrity requirements, which also ensures interoperability among suppliers. Occasionally, non-standard connectors are used due to specific form-factor requirements or other mechanical constraints. In these cases, their suitability can be assessed by comparing their specifications with industry-standard parts.

AI Data Bandwidth in Signal Integrity

When considering signal integrity, bandwidth and impedance are crucial electrical characteristics. Pin count, materials used, and mounting methods are significant mechanical considerations that impact performance and reliability. As HPC systems consume more power, contact resistance becomes increasingly important for improving data centre power efficiency.

For CPU connectivity, solderless interfaces often take the form of land grid array (LGA) or pin grid array (PGA) packages. Intel pioneered the LGA, using it for almost all its CPUs. Processors not designed to be user-replaceable might use a ball grid array (BGA), which connects components to the printed circuit board using solder balls. This is common for GPUs and some CPUs. The rate of data transfer between memory and a processor is a key factor in system performance. The latest development in random access memory (RAM) is the shift from DDR4 to DDR5, with DDR4-3200 modules reaching a peak bandwidth of 25.6 GB/s and DDR5-4800 modules reaching 38.4 GB/s.
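The 25.6 and 38.4 figures quoted above follow from multiplying a module's transfer rate by the width of its data bus, which gives a result in gigabytes per second. The sketch below shows that arithmetic. The DDR4-3200 and DDR5-4800 speed grades and the 64-bit data bus are assumptions chosen because they reproduce the quoted numbers, and the function name is illustrative.

```python
def peak_module_bandwidth_gb_s(transfer_rate_mt_s: float, bus_width_bits: int = 64) -> float:
    """Peak theoretical bandwidth of one memory module in GB/s.

    transfer_rate_mt_s: transfers per second in millions (e.g. 3200 for DDR4-3200).
    bus_width_bits: data bus width, excluding ECC bits (assumed 64 here).
    """
    bytes_per_transfer = bus_width_bits / 8
    return transfer_rate_mt_s * bytes_per_transfer / 1000  # MB/s -> GB/s


ddr4 = peak_module_bandwidth_gb_s(3200)  # assumed speed grade: DDR4-3200
ddr5 = peak_module_bandwidth_gb_s(4800)  # assumed speed grade: DDR5-4800

print(f"DDR4-3200: {ddr4:.1f} GB/s")
print(f"DDR5-4800: {ddr5:.1f} GB/s")
```

These are theoretical peaks per module. Sustained bandwidth in a real system is lower and depends on the memory controller and access pattern.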

This evolution influences chip interface design. The latest LGA 4677 IC sockets offer link bandwidth up to 128 Gbps, typically supporting 8-channel DDR5 memory. Each of these tightly spaced contacts can carry up to 0.5 A, reflecting the power demands of modern high-performance processors. DDR5 dual inline memory module (DIMM) sockets now support data rates of up to 6.4 Gbps per pin, with mechanical designs that save space and improve airflow around components on the printed circuit board.

Connecting AI Beyond the Board

PCI Express

Most processor boards feature several PCI Express (PCIe) slots for add-in cards, with slot types ranging from x1 to x16. The largest slots are typically used for high-speed GPU connectivity. The PCIe standard allows up to 32 bidirectional, low-latency serial communication "lanes," each consisting of one differential pair for transmitting and one for receiving data. PCIe 6.0, announced in January 2022, doubles the bandwidth of its predecessor to 256 GB/s for a x16 link (both directions combined), operating at 64 GT/s per lane, although hardware availability is currently limited.
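PCIe link bandwidth scales with the per-lane transfer rate and the number of lanes, so the headline figure for any slot can be estimated with a short calculation. The following is a minimal sketch: the per-lane rates and encoding efficiencies in the table are the commonly published values for each generation, the PCIe 6.0 entry ignores FLIT, FEC, and CRC overhead, and the names are illustrative.

```python
# generation -> (per-lane rate in GT/s, line-encoding efficiency)
PCIE_GENERATIONS = {
    3: (8.0, 128 / 130),
    4: (16.0, 128 / 130),
    5: (32.0, 128 / 130),
    6: (64.0, 1.0),  # simplification: FLIT/FEC/CRC overhead is ignored here
}


def pcie_bandwidth_gb_s(generation: int, lanes: int, bidirectional: bool = False) -> float:
    """Approximate PCIe link bandwidth in GB/s for a given generation and width."""
    rate_gt_s, efficiency = PCIE_GENERATIONS[generation]
    per_direction = rate_gt_s * efficiency * lanes / 8  # bits -> bytes
    return per_direction * (2 if bidirectional else 1)


for gen in sorted(PCIE_GENERATIONS):
    one_way = pcie_bandwidth_gb_s(gen, lanes=16)
    both = pcie_bandwidth_gb_s(gen, lanes=16, bidirectional=True)
    print(f"PCIe {gen}.0 x16: {one_way:.1f} GB/s per direction, {both:.1f} GB/s total")
```

Under these assumptions a PCIe 6.0 x16 link comes out at 128 GB/s in each direction, or 256 GB/s counting both directions together.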

InfiniBand

InfiniBand, common in HPC clusters, offers high speed and low latency, with maximum link performance of 400 Gbps and support for many thousands of nodes in a subnet. It can use board form factor connections and supports both active and passive copper cabling, active optical cabling, and optical transceivers. InfiniBand is complementary to Fibre Channel and Ethernet protocols but offers higher performance and better I/O efficiency. Common connector types for high-speed applications include QSFP+, zQSFP+, microQSFP, and CXP.

Ethernet

High-speed Ethernet is increasingly common in HPC, with main connector types including CFP, CFP2, CFP4, and CFP8. CFP stands for C form-factor pluggable. CFP2 and CFP4 offer up to 28 Gbps per lane and support CFP-compliant optical transceivers for 40 Gbps and 100 Gbps Ethernet. CFP8 connectors support up to 400 Gbps connectivity using 16 lanes of 25 Gbps.
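The aggregate rates above are sums of parallel lanes, and the per-lane rating needs some headroom over the payload rate because line coding adds overhead. The sketch below reads the CFP8 figure as 16 lanes of 25 Gbps and assumes a 100 Gbps link built from 4 lanes of 25 Gbps with 64b/66b coding. Both lane layouts are assumptions based on common configurations, not details given in the article.

```python
CFP_LANE_LIMIT_GBPS = 28.0  # per-lane ceiling quoted in the text for CFP2/CFP4


def aggregate_gbps(lanes: int, payload_per_lane_gbps: float) -> float:
    """Total payload rate of a link made of identical parallel lanes."""
    return lanes * payload_per_lane_gbps


def line_rate_gbps(payload_gbps: float, coded_bits: int = 66, payload_bits: int = 64) -> float:
    """Per-lane signalling rate once 64b/66b line coding is applied."""
    return payload_gbps * coded_bits / payload_bits


total_100g = aggregate_gbps(4, 25.0)   # assumed 4 x 25G layout
total_400g = aggregate_gbps(16, 25.0)  # 16 x 25G, as read from the text
lane_rate = line_rate_gbps(25.0)

print(f"100G link: {total_100g:.0f} Gbps from 4 lanes")
print(f"400G link: {total_400g:.0f} Gbps from 16 lanes")
print(f"25G lane after 64b/66b coding: {lane_rate} Gbps "
      f"(fits within {CFP_LANE_LIMIT_GBPS} Gbps: {lane_rate <= CFP_LANE_LIMIT_GBPS})")
```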

Fibre Channel

Fibre Channel, specific to storage area networks (SANs), is widely deployed in HPC environments, supporting both fibre and copper media. It offers low latency, high bandwidth, and high throughput, with current support of up to 128 Gbps and a roadmap to 1 Terabit Fibre Channel (TFC). Connector types range from traditional LC to zQSFP+ for the highest bandwidth connections.

SATA and SAS

Serial Advanced Technology Attachment (SATA) and Serial Attached SCSI (SAS) are protocols designed for high-speed data transfer, primarily used to connect hard drives and solid-state storage devices within HPC clusters. Both have dedicated connector formats with internal and external variants. SAS is generally preferred for HPC due to its higher speed (up to 12 Gbps) but is more expensive than SATA. Often, the operating speed of the storage device, rather than the interface, limits data transfer rates.
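The point that the storage device often limits throughput can be made concrete by comparing the payload rate of the link with the sustained rate of the drive and taking the smaller of the two. The sketch below assumes 8b/10b line coding on both a 6 Gbps SATA link and a 12 Gbps SAS link. The drive throughput figures are hypothetical placeholders, not measurements.

```python
def link_payload_mb_s(line_rate_gbps: float, encoding_efficiency: float = 8 / 10) -> float:
    """Usable payload rate of a serial link in MB/s, assuming 8b/10b coding."""
    return line_rate_gbps * 1000 * encoding_efficiency / 8  # Gbps -> MB/s


def effective_transfer_mb_s(line_rate_gbps: float, device_mb_s: float) -> float:
    """Transfer rate is bounded by the slower of the link and the device."""
    return min(link_payload_mb_s(line_rate_gbps), device_mb_s)


# Hypothetical sustained device rates, for illustration only.
HARD_DRIVE_MB_S = 250.0
FAST_SSD_MB_S = 2000.0

for name, rate in (("SATA 6 Gbps", 6.0), ("SAS 12 Gbps", 12.0)):
    print(f"{name}: link {link_payload_mb_s(rate):.0f} MB/s, "
          f"hard drive {effective_transfer_mb_s(rate, HARD_DRIVE_MB_S):.0f} MB/s, "
          f"SSD {effective_transfer_mb_s(rate, FAST_SSD_MB_S):.0f} MB/s")
```

With the assumed hard drive, both interfaces deliver the same rate because the drive is the bottleneck. Only the faster device makes the difference between the two links visible.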

Passive Components and Powering AI Processors

As processing speed and data transfer rates increase, so do the demands on passive components. Powering AI processors in data centres requires ferrite-cored inductors that can carry tens of amps for EMI filtering in decentralized power architectures. Low DC resistance and low core losses are essential. Innovations like single-turn, flat wire ferrite inductors, designed for point-of-load power converters, are rated up to 53 A with maximum DC resistance ratings of just 0.32 mΩ, minimizing losses and heat dissipation.
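The benefit of a very low DC resistance is easiest to see as conduction loss, which is the square of the current multiplied by the resistance. The sketch below applies that formula to the 53 A and 0.32 milliohm figures above. The 2 milliohm comparison part is hypothetical, and core losses are not modelled.

```python
def conduction_loss_w(current_a: float, dc_resistance_ohms: float) -> float:
    """DC conduction loss in a winding: P = I^2 * R."""
    return current_a ** 2 * dc_resistance_ohms


RATED_CURRENT_A = 53.0
FLAT_WIRE_DCR_OHMS = 0.32e-3   # maximum DCR quoted in the text
COMPARISON_DCR_OHMS = 2.0e-3   # hypothetical higher-resistance inductor

flat_wire_loss = conduction_loss_w(RATED_CURRENT_A, FLAT_WIRE_DCR_OHMS)
comparison_loss = conduction_loss_w(RATED_CURRENT_A, COMPARISON_DCR_OHMS)

print(f"Flat wire inductor: {flat_wire_loss:.2f} W")
print(f"Comparison inductor: {comparison_loss:.2f} W")
```

At full rated current the quoted part dissipates just under 1 W in its winding, while the hypothetical 2 milliohm part would dissipate several watts at the same current.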

High-performance processing necessitates high-current power rails with good voltage regulation and fast response to transients. Designers must consider frequency-dependent characteristics beyond capacitance and voltage ratings. Aluminium electrolytic capacitors, traditionally used for high capacitance values, are now often replaced by polymer and hybrid electrolytic capacitors for their lower equivalent series resistance (ESR) and longer operating life.
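One way to look at a capacitor's frequency-dependent behaviour is the standard series model, in which the ideal capacitance is combined with its ESR and its equivalent series inductance (ESL). The sketch below evaluates the impedance magnitude of that model and its self-resonant frequency. The component values are hypothetical and were chosen only to illustrate the shape of the curve.

```python
import math


def capacitor_impedance_ohms(freq_hz: float, capacitance_f: float,
                             esr_ohms: float, esl_h: float) -> float:
    """Impedance magnitude of a series R-L-C capacitor model."""
    omega = 2 * math.pi * freq_hz
    reactance = omega * esl_h - 1 / (omega * capacitance_f)
    return math.hypot(esr_ohms, reactance)


def self_resonant_frequency_hz(capacitance_f: float, esl_h: float) -> float:
    """Frequency at which capacitive and inductive reactance cancel."""
    return 1 / (2 * math.pi * math.sqrt(esl_h * capacitance_f))


# Hypothetical part: 100 uF, 5 mOhm ESR, 2 nH ESL.
C, ESR, ESL = 100e-6, 5e-3, 2e-9
srf = self_resonant_frequency_hz(C, ESL)

for f in (1e3, 10e3, 100e3, srf, 1e6, 10e6):
    z = capacitor_impedance_ohms(f, C, ESR, ESL)
    print(f"{f / 1e3:10.1f} kHz: |Z| = {z * 1e3:8.3f} mOhm")
```

Below resonance the part behaves as a capacitor and its impedance falls with frequency. At resonance the impedance bottoms out at the ESR, and above it the ESL dominates, which is why both parameters matter for decoupling fast transients.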

The high power consumption of data centres has increased the voltage used in rack architectures from 12 V to 48 V for improved power efficiency. 48 V-rated aluminium polymer capacitors designed for high ripple current capabilities (up to 26 A) are available in values up to 1,100 µF, with some manufacturers offering rectangular shapes suitable for stacking into modules.
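The efficiency gain from moving to 48 V comes from the lower current needed to deliver the same power: quadrupling the voltage cuts the current to a quarter and the resistive distribution loss to one sixteenth. The sketch below works through that reasoning. The 12 kW rack load and 1 milliohm distribution resistance are hypothetical values chosen for round numbers.

```python
def distribution_loss_w(power_w: float, bus_voltage_v: float, resistance_ohms: float) -> float:
    """Resistive loss in the distribution path for a given delivered power."""
    current_a = power_w / bus_voltage_v
    return current_a ** 2 * resistance_ohms


RACK_POWER_W = 12_000.0        # hypothetical rack load
PATH_RESISTANCE_OHMS = 1.0e-3  # hypothetical busbar and connector resistance

loss_12v = distribution_loss_w(RACK_POWER_W, 12.0, PATH_RESISTANCE_OHMS)
loss_48v = distribution_loss_w(RACK_POWER_W, 48.0, PATH_RESISTANCE_OHMS)

print(f"12 V bus: {RACK_POWER_W / 12.0:.0f} A, {loss_12v:.1f} W lost")
print(f"48 V bus: {RACK_POWER_W / 48.0:.0f} A, {loss_48v:.1f} W lost")
print(f"Loss ratio: {loss_12v / loss_48v:.0f}x")
```

The ratio of 16 holds for any load and path resistance, because it depends only on the square of the voltage ratio.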

Multilayer ceramic capacitors (MLCCs) are widely used in power supply filtering and decoupling due to their low ESR and equivalent series inductance (ESL). Continuous improvements in volumetric efficiency have resulted in components like the 1608M (1.6 mm × 0.8 mm) size MLCC with a 1 µF/100 V rating, saving significant volume and surface area compared to previous models.

Recent developments in MLCC packaging technology have enabled bonding without metal frames, maintaining low ESR, ESL, and thermal resistance. Ceramic capacitors with dielectric materials that exhibit minimal capacitance shift with voltage and predictable, linear capacitance changes with temperature are preferred for filtering and decoupling applications.

Conclusion

The need for high processor performance in AI systems imposes specific demands on passive and electromechanical components. These components must be selected with a focus on high-speed data transfer, efficient power delivery, thermal management, reliability, signal integrity, size constraints, and the specific requirements of AI applications, ensuring the electronic system meets the demands of AI workloads effectively and reliably.

Story Credit: Avnet